It’s hard to be a fan of Twitter right now. The company is sticking up for conspiracy theorist Alex Jones when nearly all other platforms have given him the boot, it’s overrun with bots, and now it’s breaking users’ favorite third-party Twitter clients like Tweetbot and Twitterrific by shutting off APIs these apps relied on. Worse still, Twitter isn’t taking full responsibility for its decisions.

In a company email it shared today, Twitter cited “technical and business constraints” that it can no longer ignore as the reason behind the APIs’ shutdown.

It said the clients relied on “legacy technology” that was still in a “beta state” after more than 9 years, and had to be killed “out of operational necessity.”

This reads like passing the buck. Big time.

It’s not as if there’s some other mysterious force that maintains Twitter’s API platform, and now poor ol’ Twitter is forced to shut down old technology because there’s simply no other recourse. No.

Twitter, in fact, is the one responsible for its User Streams and Site Streams APIs – the APIs that serve the core functions of these now deprecated third-party Twitter clients. Twitter is the reason these APIs have been stuck in a beta state for nearly a decade. Twitter is the one that decided not to invest in supporting those legacy APIs, or shift them over to its new API platform.

And Twitter is the one that decided to give up on some of its oldest and most avid fans – the power users and the developer community that met their needs – in hopes of shifting everyone over to its own first-party clients instead.

The company even refused to acknowledge how important these users and developers have been to its community over the years, by citing the fact that the APIs it’s terminating – the ones that power Tweetbot, Twitterrific, Tweetings and Talon – are only used by “less than 1%” of Twitter developers. Burn!

Way to kick a guy when he’s already down, Twitter.

But just because a community is small in numbers does not mean its voice is not powerful or its influence is not felt.

Hence, the #BreakingMyTwitter hashtag, which Twitter claims to be watching “quite often.”

The one where users are reminding Twitter CEO Jack Dorsey about that time he apologized to Twitter developers for not listening to them, and acknowledged the fact they made Twitter what it is today. The time when he promised to do better.

This is…not better.

The company’s email also says it hopes to eventually learn “why people hire 3rd party clients over our own apps.”

Its own apps?

Oh, you mean like TweetDeck, the app Twitter acquired then shut down on Android, iPhone and Windows? The one it generally acted like it forgot it owned? Or maybe you mean Twitter for Mac (previously Tweetie, before its acquisition), the app it shut down this year, telling Mac users to just use the web instead? Or maybe you mean the nearly full slate of TV apps that Twitter decided no longer needed to exist?

And Twitter wonders why users don’t want to use its own clients?

Or perhaps, users want a consistent experience – one that doesn’t involve a million inconsequential product changes like turning stars to hearts or changing the character counter to a circle. Maybe they appreciate the fact that the third parties seem to understand what Twitter is better than Twitter itself does: Twitter has always been about a real-time stream of information. It’s not meant to be another Facebook-style algorithmic News Feed. The third-party clients respect that. Twitter does not.

Yesterday, the makers of Twitterrific spoke to the API changes, noting that the app would no longer be able to stream tweets, send native push notifications, or update its Today view, and that new tweets and DMs will be delayed.

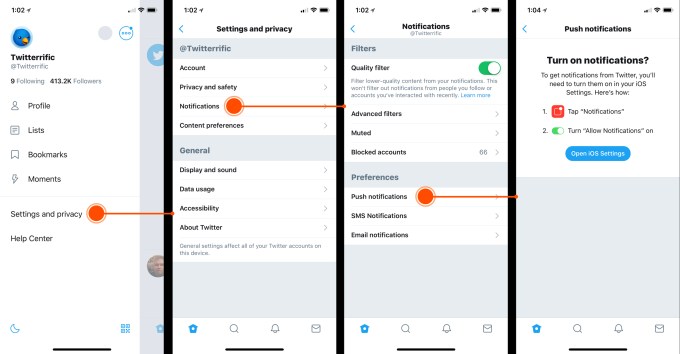

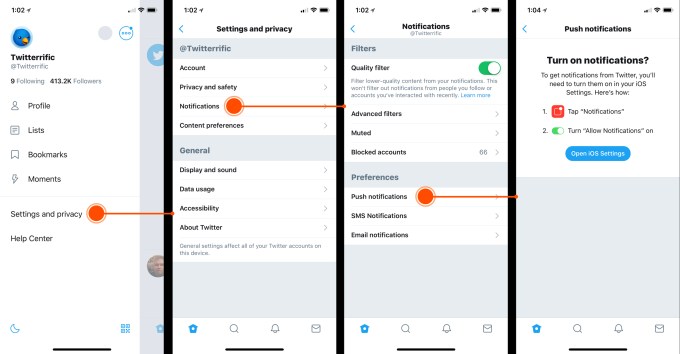

It recommended users download Twitter’s official mobile app for notifications going forward.

In other words, while Twitterrific will hang around in its broken state, its customers will now have to run two Twitter apps on their device – the official one to get their notifications, and the other because they prefer the experience.

A guide to using Twitter’s app for notifications, from Iconfactory

“We understand why Twitter feels the need to update its API endpoints,” explains Iconfactory co-founder Ged Maheux, whose company makes Twitterrific. “The spread of bots, spam and trolls by bad actors that exploit their systems is bad for the entire Twitterverse, we just wish they had offered an affordable way forward for the developers of smaller, third party apps like ours.”

“Apps like the Iconfactory’s Twitterrific helped build Twitter’s brand, feature sets and even its terminology into what it is today. Our contributions were small to be sure, but real nonetheless. To be priced out of the future of Twitter after all of our history together is a tough pill to swallow for all of us,” he added.

The question many users are now facing is what to do next.

Continue to use now broken third-party apps? Move to an open platform like Mastodon? Switch to Twitter’s own clients, as it wants, where it plans to “experiment with showing alternative viewpoints” to pop people’s echo chambers…on a service that refuses to kick out people like Alex Jones?

Or maybe it’s time to admit the open forum for everything that Twitter – and social media, really – has promised is failing? Maybe it’s time to close the apps – third-party and otherwise. Maybe it’s time to go dark. Get off the feeds. Take a break. Move on.

The full email from Twitter is below:

Hi team,

Today, we’re publishing a blog post about our priorities for where we’re investing today in Twitter client experiences. I wanted to share some more with you about how we reached these decisions, and how we’re thinking about 3rd party clients specifically.

First, some history:

3rd party clients have had a notable impact on the Twitter service and the products we build. Independent developers built the first Twitter client for Mac and the first native app for iPhone. These clients pioneered product features we all know and love about Twitter, like mute, the pull-to-refresh gesture, and more.

We love that developers build experiences on our APIs to push our service, technology, and the public conversation forward. We deeply respect the time, energy, and passion they’ve put into building amazing things using Twitter.

But we haven’t always done a good job of being straightforward with developers about the decisions we make regarding 3rd party clients. In 2011, we told developers (in an email) not to build apps that mimic the core Twitter experience. In 2012, we announced changes to our developer policies intended to make these limitations clearer by capping the number of users allowed for a 3rd party client. And, in the years following those announcements, we’ve told developers repeatedly that our roadmap for our APIs does not prioritize client use cases — even as we’ve continued to maintain a couple specific APIs used heavily by these clients and quietly granted user cap exceptions to the clients that needed them.

It is now time to make the hard decision to end support for these legacy APIs — acknowledging that some aspects of these apps would be degraded as a result. Today, we are facing technical and business constraints we can’t ignore. The User Streams and Site Streams APIs that serve core functions of many of these clients have been in a “beta” state for more than 9 years, and are built on a technology stack we no longer support. We’re not changing our rules, or setting out to “kill” 3rd party clients; but we are killing, out of operational necessity, some of the legacy APIs that power some features of those clients. And it has not been a realistic option for us today to invest in building a totally new service to replace these APIs, which are used by less than 1% of Twitter developers.

We’ve heard the feedback from our customers about the pain this causes. We check out #BreakingMyTwitter quite often and have spoken with many of the developers of major 3rd party clients to understand their needs and concerns. We’re committed to understanding why people hire 3rd party clients over our own apps. And we’re going to try to do better with communicating these changes honestly and clearly to developers. We have a lot of work to do. This change is a hard, but important step, towards doing it. Thank you for working with us to get there.

Thanks,

Rob

