Google has released its first diversity report since the infamous James Damore memo and the fallout that resulted from it. Those are both long stories but the TL;DR is that Damore said some sexist things in a memo that went viral. He got fired and then sued Google for firing him. That lawsuit, however, was shot down by the National Labor Relations Board in February. Then another employee, Tim Chevalier, alleged he was fired for advocating for diversity, as Gizmodo reported later that month. Now, Chevalier is suing Google.

“I was retaliated against for pointing out white privilege and sexism as they exist in the workplace at Google and I think that’s wrong,” Chevalier told TechCrunch a few months ago about why he decided to sue. “I wanted to be public about it so that the public would know about what’s going on with treatment of minorities at Google.”

In court, Google is trying to move the case into arbitration. Earlier this month, Google’s attorney said Chevalier previously “agreed in writing to arbitrate the claims asserted” in his original complaint, according to court documents filed June 11, 2018.

Now that I’ve briefly laid out the state of diversity and inclusion at Google, here’s the actual report, which is Google’s fifth diversity report to date and by far the most comprehensive. For the first time, Google has provided information around employee retention and intersectionality.

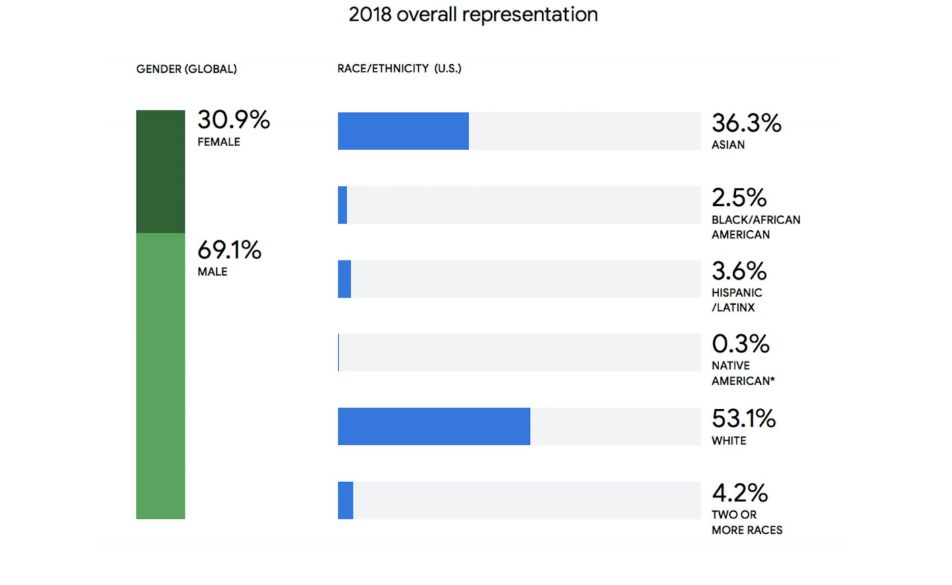

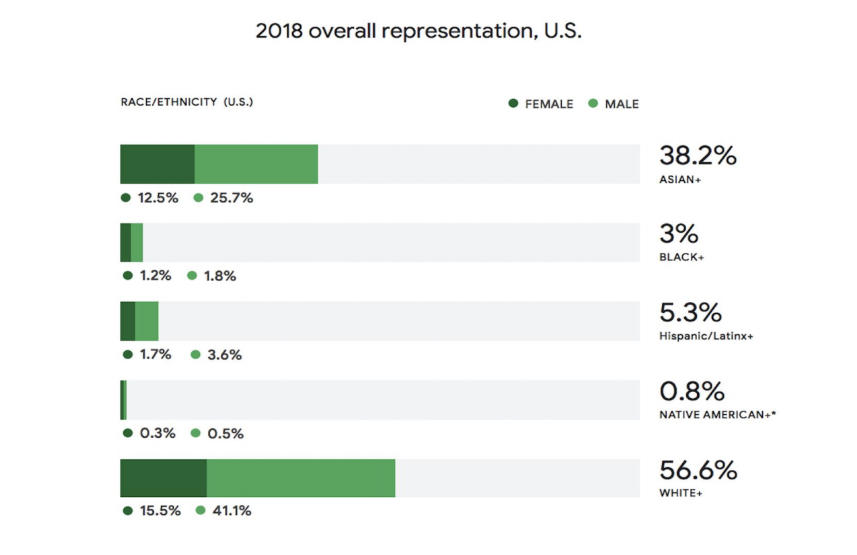

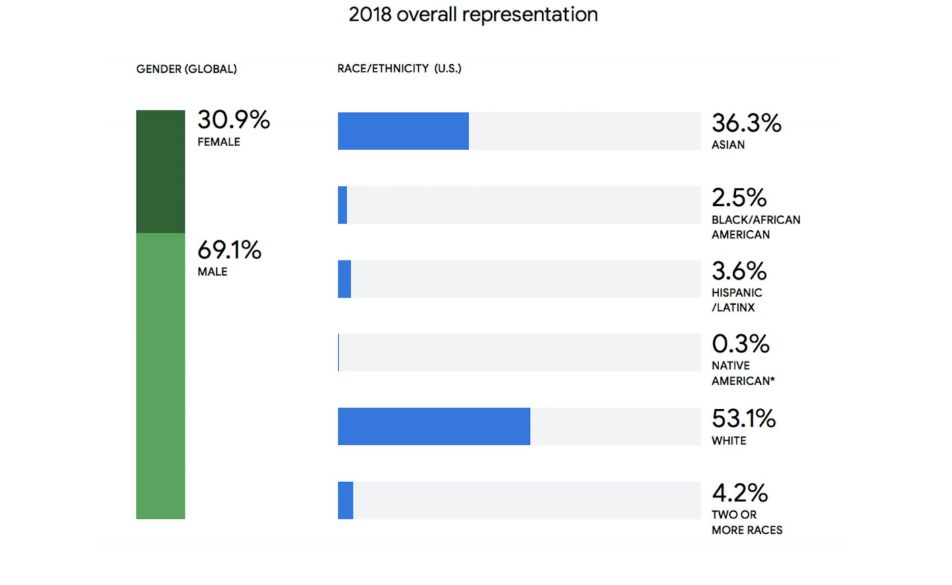

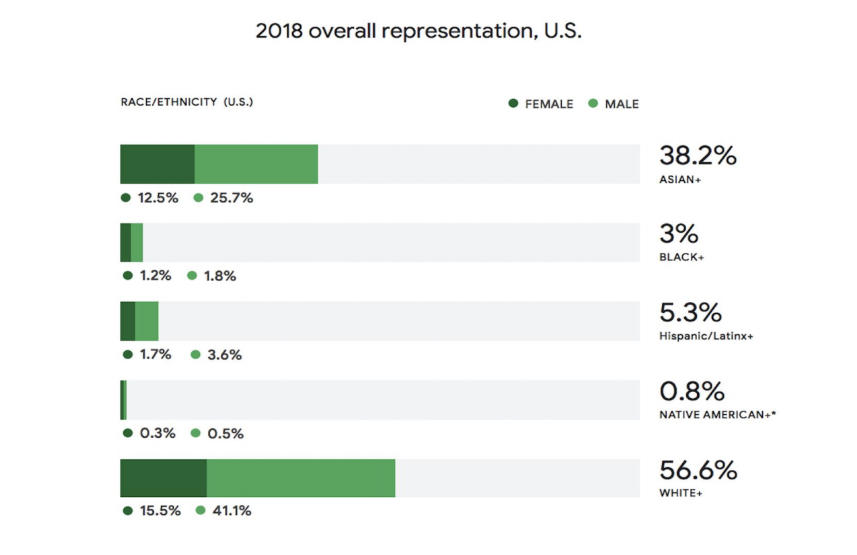

First, here are some high-level numbers:

- 30.9 percent female globally

- 2.5 percent black in U.S.

- 3.6 percent Latinx in U.S.

- 0.3 percent Native American in U.S.

- 4.2 percent two or more races in U.S.

Google also recognizes its gender reporting is “not inclusive of our non-binary population” and is looking for the best way to measure gender moving forward. As Google itself notes, representation for women, black and Latinx people has barely increased, and for Latinx representation, it’s actually gotten worse. Last year, Google was 31 percent female, two percent black and four percent Latinx.
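To make the year-over-year comparison concrete, here is a minimal sketch of the arithmetic using only the figures quoted in this article. The dictionary keys and the `point_change` helper are illustrative names, not anything from Google's report. Last year's numbers are the rounded values cited above, so small differences (such as the one for women) may be rounding noise rather than a real change.

```python
# Percent of workforce, as quoted in the article.
# Last year's values are rounded (31, 2, 4), so the deltas are approximate.
this_year = {"female (global)": 30.9, "black (U.S.)": 2.5, "Latinx (U.S.)": 3.6}
last_year = {"female (global)": 31.0, "black (U.S.)": 2.0, "Latinx (U.S.)": 4.0}


def point_change(current, previous):
    """Return the change in percentage points for each group."""
    return {
        group: round(current[group] - previous[group], 1)
        for group in current
    }


changes = point_change(this_year, last_year)
for group, delta in changes.items():
    print(f"{group}: {delta:+.1f} percentage points")
```

Every difference here is half a percentage point or less, which is the sense in which representation has barely moved.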

At the leadership level, Google has made some progress year over year, but the company’s higher ranks are still 74.5 percent male and 66.9 percent white. So, congrats on the progress but please do better next time because this is not good enough.

Moving forward, Google says its goal is to reach or exceed the available talent pool in terms of underrepresented talent. But what that would actually look like is not clear. In an interview with TechCrunch, Google VP of Diversity and Inclusion Danielle Brown told me Google looks at skills, jobs and census data around underrepresented groups graduating with relevant degrees. Still, she said she’s not sure what the representation numbers would look like if Google achieved that. In response to what a job well done would look like, Brown said:

“You know as well as we do that it’s a long game. Do we ever get to good? I don’t know. I’m optimistic we’ll continue to make progress. It’s not a challenge we’ll solve overnight. It’s quite systemic. Despite doing it for a long time, my team and I remain really optimistic that this is possible.”

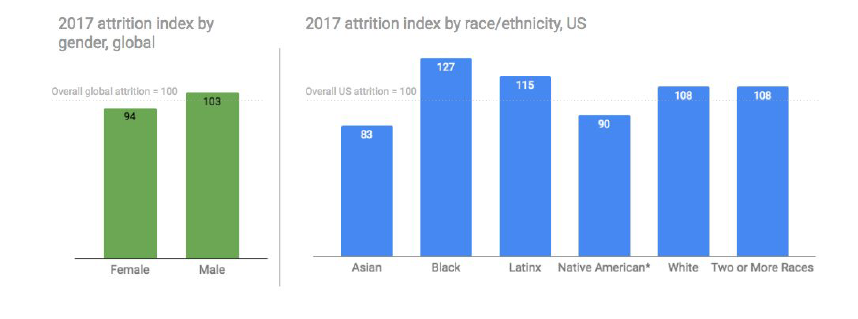

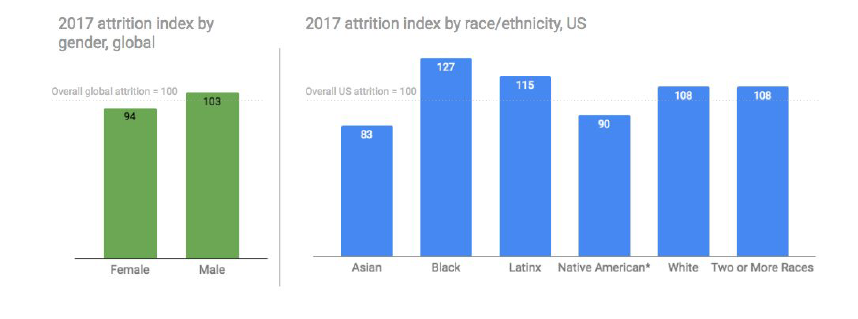

As noted above, Google has also provided data around attrition for the first time. It’s no surprise, to me at least, that attrition rates for black and Latinx employees were the highest in 2017. To be clear, attrition rates are an indicator of how many people leave a company. When one works at a company that has so few black and brown people in leadership positions, and at the company as a whole, the opportunities to be the unwelcome recipient of othering, micro-aggressions, discrimination and so forth are unfortunately plentiful.

“A clear low light, obviously, in the data is the attrition for black and Latinx men and women in the U.S.,” Brown told TechCrunch. “That’s an area where we’re going to be laser-focused.”

She added that some of Google’s internal survey data shows employees are more likely to leave when they report feeling like they’re not included. That’s why Google is doing some work around ally training and “what it means to be a good ally,” Brown told me.

“One thing we’ve all learned is that if you stop with unconscious bias training and don’t get to conscious action, you’re not going to get the type of action you need,” she said.

From an attrition standpoint, where Google is doing well is around the retention of women versus men. It turns out women are staying at Google at higher rates than men, across both technical and non-technical areas. Meanwhile, Brown has provided bi-weekly attrition numbers to Google CEO Sundar Pichai and his leadership team since January in an attempt to intervene in potential issues before they become bigger problems, she said.

via Google: Attrition figures have been weighted to account for seniority differences across demographic groups to ensure a consistent baseline for comparison.

As noted above, Google for the first time broke out information around intersectionality. According to the company’s data, women of all races are less represented than men of the same race. That’s, again, not surprising. While Google is 3 percent black, black women account for just 1.2 percentage points of that figure. And Latinx women make up just 1.7 percentage points of Google’s 5.3 percent Latinx employee base. That means, as Google notes, the company’s gains in representation of women have “largely been driven by” white and Asian women.
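For a rough sense of scale, the sketch below works out what share of each group is female. It assumes the 1.2 and 1.7 figures are percentages of Google's overall workforce, like the 3 and 5.3 percent group totals; that reading is an assumption, and the names in the code are illustrative.

```python
# (women in group, whole group), both as percent of total workforce.
# Assumption: all four figures use the same base (Google's overall workforce).
groups = {
    "black": (1.2, 3.0),
    "Latinx": (1.7, 5.3),
}


def share_of_group(women_pct, group_pct):
    """Percent of a group's employees who are women."""
    return round(100 * women_pct / group_pct, 1)


shares = {name: share_of_group(w, total) for name, (w, total) in groups.items()}
for name, share in shares.items():
    print(f"Women are about {share} percent of {name} employees")
```

Under that assumption, women are a minority within both groups, which is consistent with the company's statement that women of all races are less represented than men of the same race.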

Since joining Google last June from Intel, Brown has had a full plate. Shortly after the Damore memo went viral in August — just a couple of months after Brown joined — Brown said “part of building an open, inclusive environment means fostering a culture in which those with alternative views, including different political views, feel safe sharing their opinions. But that discourse needs to work alongside the principles of equal employment found in our Code of Conduct, policies, and anti-discrimination laws.”

Brown also said the document is “not a viewpoint that I or this company endorses, promotes or encourages.”

Today, Brown told me the whole anti-diversity memo was “an interesting learning opportunity for me to understand the culture and how some Googlers view this work.”

“I hope what this report underscores is our commitment to this work,” Brown told me. “That we know we have a systemic and persistent challenge to solve at Google and in the tech industry.”

Brown said she learned “not every employee is going to agree with Google’s viewpoint.” Still, she does want employees to feel empowered to discuss either positive or negative views. But “just like any workplace, that does not mean anything goes.”

When someone doesn’t follow Google’s code of conduct, she said, “we have to take it very seriously” and “try to make those decisions without regard to political views.”

Megan Rose Dickey’s PGP fingerprint for email is: 2FA7 6E54 4652 781A B365 BE2E FBD7 9C5F 3DAE 56BD