
March and April saw fresh VC in Africa, mobile money merging with social media, motorcycle taxis going digital, and TechCrunch visiting Nigeria and Ghana.

Africa’s Talking with more VC

Business APIs on the continent got a boost from global venture capital thanks to an $8.6 million round for Africa’s Talking, a Kenya-based enterprise software company.

The new financing was led by IFC Venture Capital, with participation from Orange Digital Ventures and Social Capital.

Africa’s Talking works with developers to create solution-focused APIs across SMS, voice, payment, and airtime services. The company has a network of 20,000 software developers and 1,000 clients, including solar power venture M-Kopa and financial conglomerate Ecobank.

Africa’s Talking operates in Kenya, Uganda, Rwanda, Malawi, Nigeria, Ethiopia, and Tanzania and maintains a private cloud space in London. Revenues come primarily from fees on a portion of the transactional business its solutions generate.

The company plans to use the round to hire in Nairobi. It will also expand in other African geographies and invest in IoT, analytics, payments, and cloud offerings.

CEO Samuel Gikandi and IFC Ventures’ Wale Ayeni offered insight on the round in this TechCrunch feature.

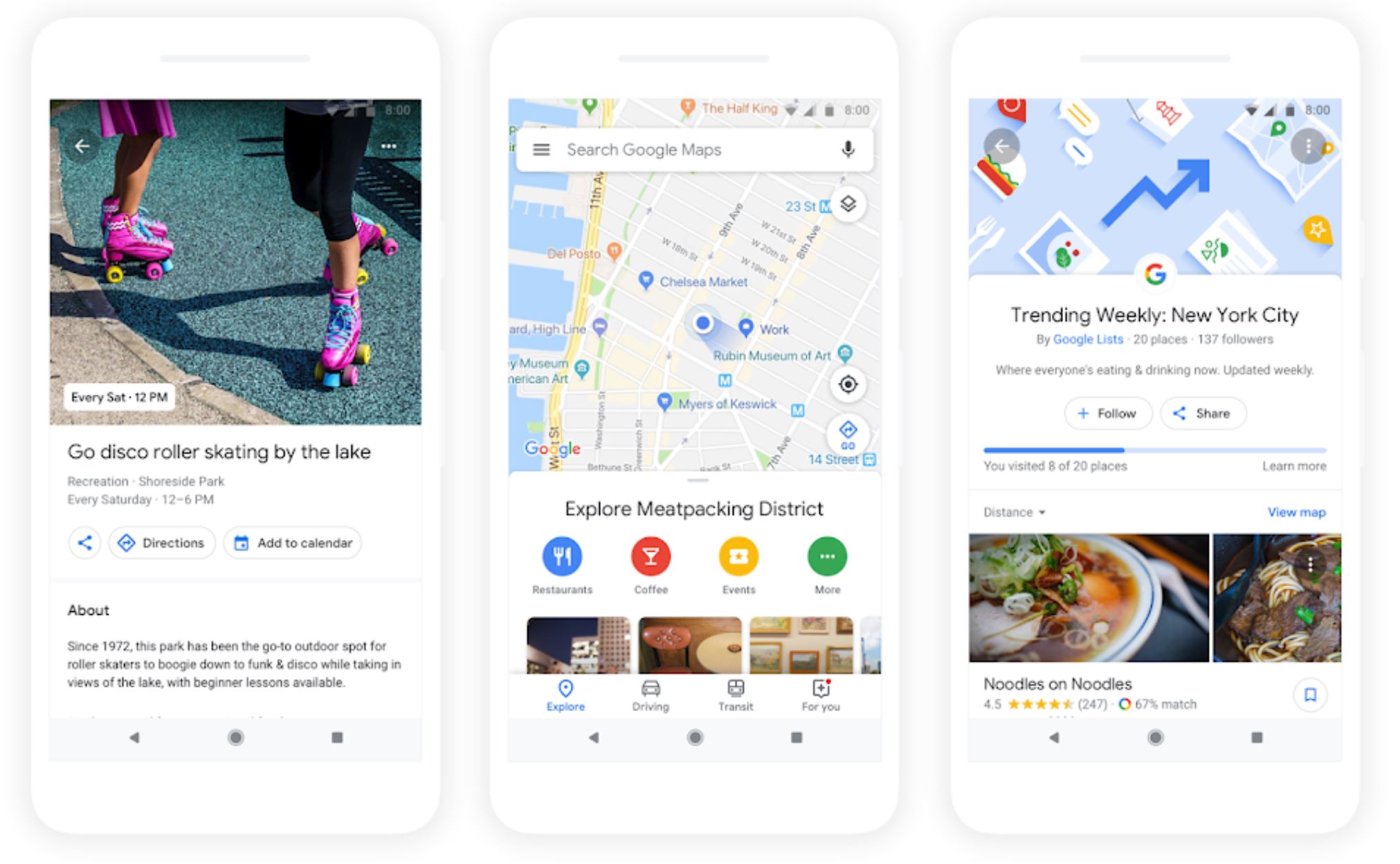

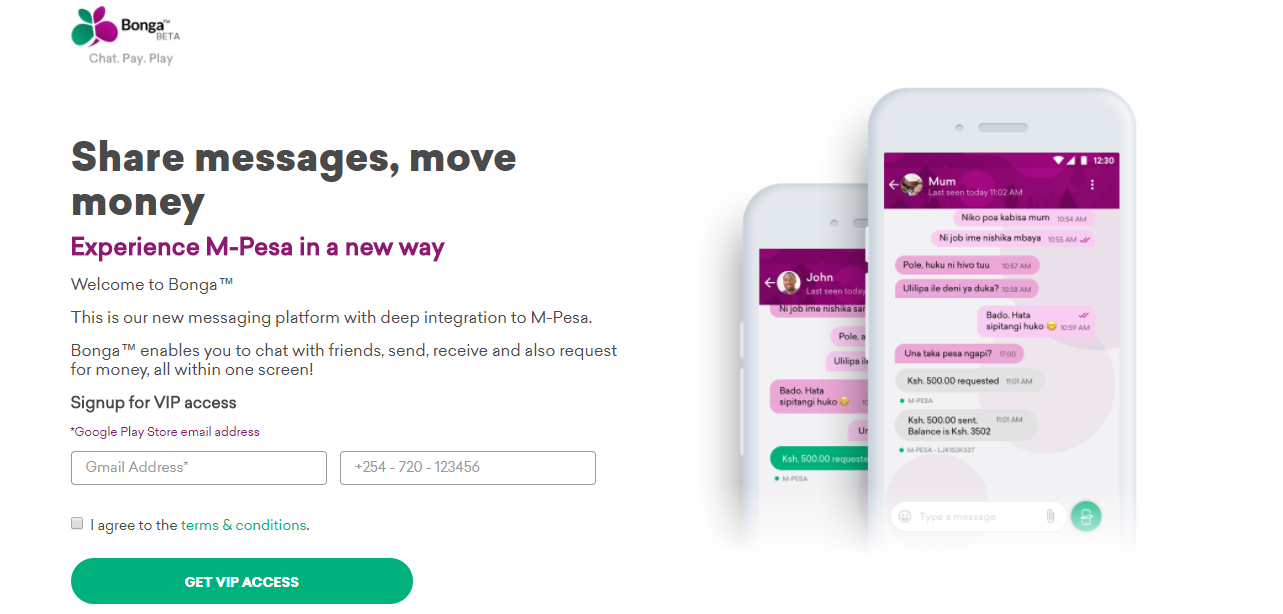

M-Pesa the social network

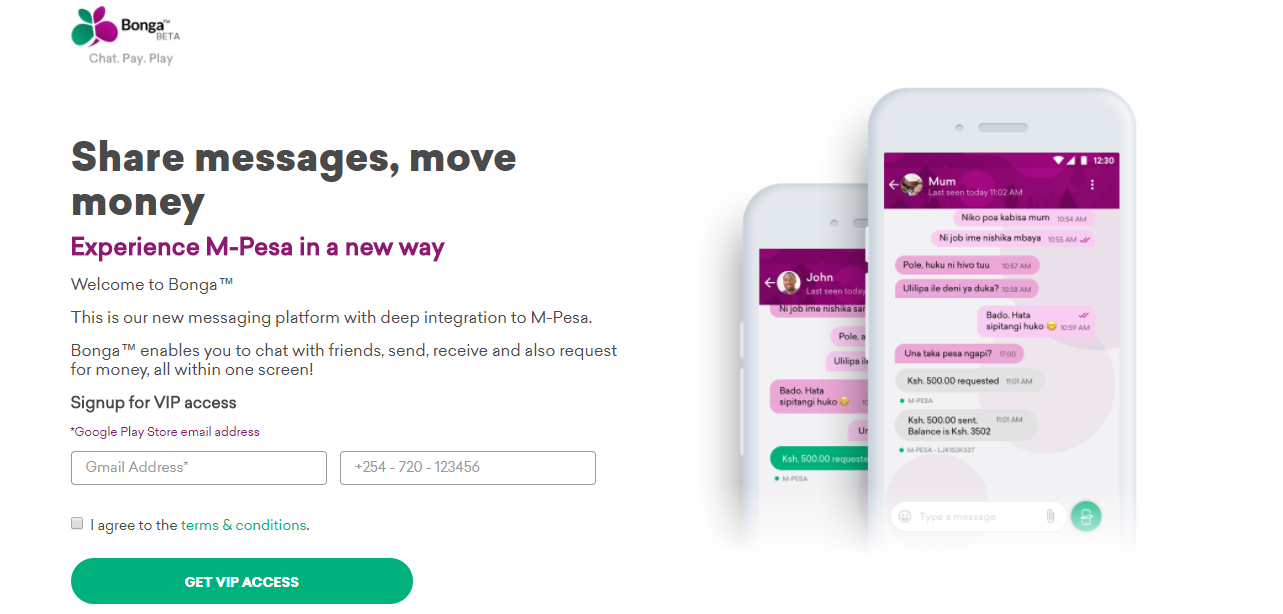

Kenya’s largest telco, Safaricom, rolled out a new social networking platform called Bonga to augment its M-Pesa mobile money product.

“Bonga is a conversational and transactional social network,” Shikoh Gitau, Alpha’s Head of Products, told TechCrunch.

The new platform is an outgrowth of Safaricom’s Alpha innovation incubator.

“[Bonga’s] focused on pay, play, and purpose…as the three main things our research found people do on our payment and mobile network,” Gitau said, naming e-commerce, gaming, and Kenya’s informal harambee savings groups as corresponding examples.

Safaricom offered Bonga to a test group of 600 users and will soon allow that group to refer it to friends as part of a three-phase rollout.

The platform will channel Facebook, YouTube, iTunes, PayPal, and eBay. Users will be able to create business profiles parallel to their social media profiles and M-Pesa accounts to sell online. Bonga will also include space for Kenya’s creative class to upload, shape, and distribute artistic products and content.

Safaricom may take Bonga to other M-Pesa markets: currently 10 in Europe, Africa, and South Asia. The Bonga announcement came shortly after Safaricom announced a money-transfer deal with PayPal.

TLcom’s $5M for Terragon

Venture firm TLcom Capital made a $5 million investment in Nigeria-based Terragon Group, the developer of a software analytics service for customer acquisition.

Terragon is based in Lagos, and its software services give clients, primarily telecommunications and financial services companies, data on Africa’s growing consumer markets.

Products allow users to drill down on multiple combinations of behavioral and demographic information and reach consumers through video and SMS campaigns while connecting to online sales and payments systems, according to the company.

Terragon has a team of 100 across Nigeria, Kenya, Ghana and South Africa and clients across consumer goods, financial services, gaming, and NGOs.

The company generates revenue primarily from transaction facilitation for its clients. Terragon bootstrapped itself into the black with annual revenues of between $4 million and $5 million, CEO Elo Umeh told TechCrunch.

Uber and Taxify’s motorcycle move

Global ride-hailing rivals Taxify and Uber launched motorcycle passenger service in East Africa. Customers of both companies in Uganda and Taxify riders in Kenya can now order up two-wheel transit by app.

The moves come as Africa’s moto-taxis, commonly known as boda bodas in the East and okadas in the West, upshift to digital.

Taxify’s app now includes a “Boda” button and Uber’s an “uberBoda” icon for ordering a two-wheel taxi by phone in their respective countries.

Other players in Africa’s motorcycle ride-hail market include Max.ng in Nigeria, SafeBoda in Uganda, and SafeMotos and Yego Moto in Rwanda.

Both Taxify and Uber look to expand motorcycle taxi service to other African countries. The full story is here at TechCrunch.

TechCrunch in Nigeria and Ghana

And finally, TechCrunch visited Nigeria and Ghana in April. We hosted meet and greets at several hubs and talked with dozens of startup heads, including a few BFX Africa 2017 alumni such as Agrocenta at Impact Hub Accra and FormPlus in Lagos. TechCrunch is scouting African startups to include in the global Startup Battlefield competitions. Applications to participate in Startup Battlefield at Disrupt San Francisco are open at apply.techcrunch.com.

More Africa Related Stories @TechCrunch

- DFS Lab is helping the developing world bootstrap itself with fintech

African Tech Around the Net