As had been previously rumored, Google introduced a revamped version of Google News at its I/O developer conference today. The A.I.-powered, redesigned news destination combines elements found in Google’s digital magazine app, Newsstand, as well as YouTube, and introduces new features like “Newscasts” and “Full Coverage” to help people get a summary or a more holistic view of a news story.

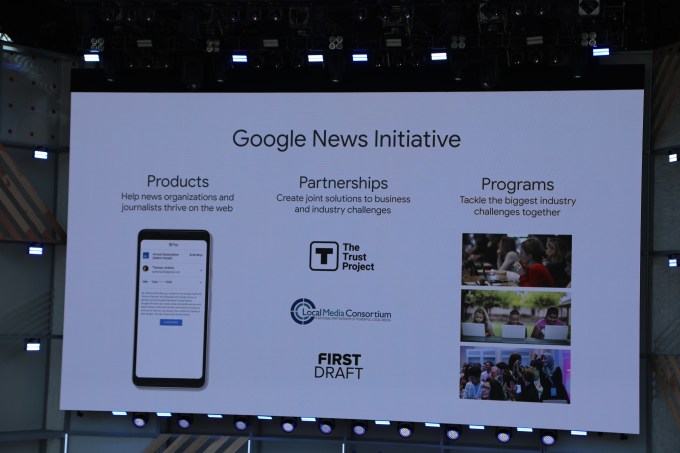

Google CEO Sundar Pichai spoke of the company’s responsibility to present accurate information to users who seek out the news on its platform, and how it could leverage A.I. technology to help with that.

The updated Google News will do three things, the company says: allow users to keep up with the news they care about, understand the full story, and enjoy and support the publishers they trust.

On the first area of focus – keeping up with the news – the updated version of Google News will present a briefing at the top of the page with the five stories you need to know about right now, as well as more stories selected just for you.

The feature uses A.I. technology to read the news on the web and assemble the key things you need to know about, including local news and events in your area. And the more you use this personalized version of Google News, the better it will get, thanks to the “reinforcement learning” technology under the hood.

However, you can also tell Google News what you want to see more or less of, in terms of both topics and publications.

In addition to the personalization and A.I.-driven news selection, the revamped Google News looks different, too. The site has been updated to use Google’s Material Design language, which makes it fit in better with Google’s other products, and it puts a heavier emphasis on photos and videos – including those from YouTube.

Another new feature called “Newscasts” will help users get a feel for a story through short-form summaries presented in a card-style design you can flip through.

If you want to learn more, you can dive more deeply into stories through the “Full Coverage” feature, which is also launching along with the redesign.

Full Coverage largely aims to help users get a better perspective on news, that is, to pop their filter bubbles by presenting news from multiple sources. It also aggregates coverage into “opinion,” “analysis” and “fact checks.” These were labels Google News was already using in the older version of the site, but they are now much more prominent because they appear as section titles.

Full Coverage will also include a timeline of events, so you can get a sense of the history of what’s being reported.

As Google News PM Trystan Upstill explained, “having a productive conversation or debate requires everyone to have access to the same information.” That seems to be a bit of a swipe at Facebook, and the way it allowed fake news to propagate across its social network.

In another competitive move against Facebook, Google announced an easier way for users to subscribe to publisher content through a new “Subscribe with Google” option rolling out in the coming weeks.

The process of subscribing will leverage users’ Google accounts and the payment information they already have on file. Then, the paid content becomes available across Google platforms, including Google News, Google Search and publishers’ own websites.

And Google News will integrate Newsstand, offering over 1,000 magazine titles you can follow by tapping a star icon or subscribe to.

The changes come at a time when Apple is reportedly prepping a premium news subscription service, based on the technology from Texture, the digital newsstand business it bought in March. Notably, it also arrives amid serious concerns among publishers about Facebook’s role in the media business, not only because of fake news, but also because of its methods of ranking content, among other things.

“Google’s new News app is rolling out to Android, iOS and Web in 127 countries starting today,” said Upstill. “We know getting accurate and timely information into people’s hands and supporting high quality journalism is more important than it has ever been right now.”

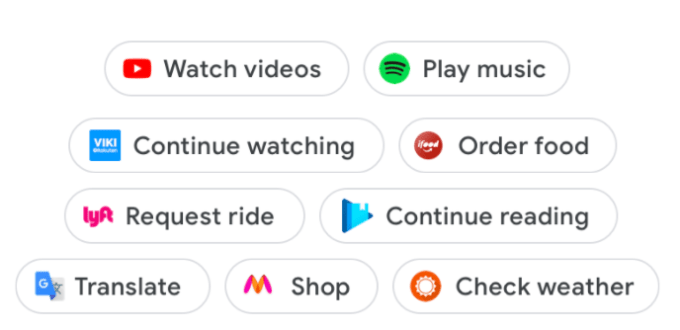

Slices is also meant to get users to interact more with the apps they have already installed, but the overall premise is a bit different from App Actions. Slices essentially provide users with a mini snippet of an app and they can appear in Google Search and the Google Assistant. From the developer’s perspective, they are all about driving the usage of their apps, but from the user’s perspective, they look like an easy way to get something done quickly.

“This radically changes how users interact with the app,” Cuthbertson said. She also noted that developers obviously want people to use their app, so every additional spot where users can interact with it is a win for them.