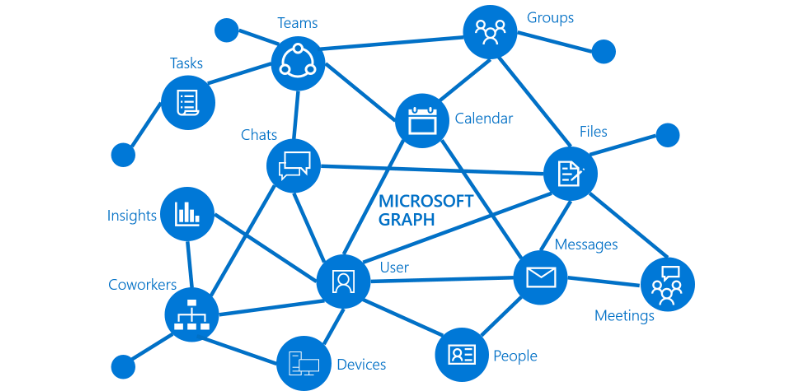

The Microsoft Graph is an interesting but also somewhat amorphous idea. It’s core to the company’s strategy, but I’m not sure most developers understand its potential just yet. Maybe it’s no surprise that Microsoft is putting quite a bit of emphasis on the Graph during its Build developer conference this week. Unless developers make use of the Graph, which is the API that provides the connectivity between everything from Windows 10 to Office 365, it won’t reach its potential, after all.

Microsoft describes the Graph as “the API for Microsoft 365.” And indeed, Microsoft 365 is the second topic the company is really hammering home during its event. It’s a combined subscription service for Office 365, Windows 10 and the company’s enterprise mobility services.

“Microsoft 365 is where the world gets its best work done,” said Microsoft corporate vice president Joe Belfiore. “With 135 million commercial monthly active users of Office 365 and nearly 700 million Windows 10 connected devices, Microsoft 365 helps developers reach people how and where they work.”

Leaving the standard keynote hyperbole aside, that is indeed how Microsoft sees this service — and the connective tissue here is the Microsoft Graph.

The Graph is what powers features like the Windows 10 Timeline, which desperately needs buy-in from developers to succeed. It also lets developers send notifications when a file is added to a OneDrive folder, or kick off an onboarding workflow when a new person is added to a team in Azure Active Directory.
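To make the OneDrive example a bit more concrete, here is a rough sketch of the kind of request body a developer might send to the Graph's subscriptions endpoint to get a webhook call when something changes in a user's drive. The field names follow the Graph's change-notification subscriptions as I understand them; the helper name, the webhook URL, the `clientState` value and the 24-hour lifetime are all made up for illustration, and the sketch only builds the payload rather than sending it (a real call would also need an OAuth access token and a publicly reachable endpoint).

```python
import json
from datetime import datetime, timedelta, timezone

# Endpoint a real client would POST the payload to (with a bearer token).
GRAPH_SUBSCRIPTIONS_URL = "https://graph.microsoft.com/v1.0/subscriptions"


def build_drive_subscription(notification_url, lifetime_hours=24, now=None):
    """Build a subscription payload for changes under the user's OneDrive root.

    `build_drive_subscription` is a hypothetical helper, not part of any SDK.
    """
    now = now or datetime.now(timezone.utc)
    expires = now + timedelta(hours=lifetime_hours)
    return {
        # Drive subscriptions report changes as "updated".
        "changeType": "updated",
        # Assumed: an HTTPS endpoint you control that receives the notifications.
        "notificationUrl": notification_url,
        # Watch the signed-in user's OneDrive root folder.
        "resource": "/me/drive/root",
        # Subscriptions expire; the service caps how far out this can be.
        "expirationDateTime": expires.strftime("%Y-%m-%dT%H:%M:%SZ"),
        # Placeholder secret echoed back in notifications so you can verify them.
        "clientState": "example-shared-secret",
    }


if __name__ == "__main__":
    payload = build_drive_subscription("https://example.com/graph-webhook")
    print("POST", GRAPH_SUBSCRIPTIONS_URL)
    print(json.dumps(payload, indent=2))
```

From there, the service that receives the notification would decide what to do with it, whether that means pinging a chat channel or starting a workflow.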

At Build, Microsoft is talking about the Graph quite a bit, but it’s not announcing all that many new features for it, beyond a new and updated Teams API in the Microsoft Graph. As Microsoft’s Director for Office 365 Ecosystem Marketing Rob Howard told me, though, the company now believes that all the engagement surfaces to highlight Graph data are in place.

“Developers now have a reason to put their data into the graph,” he said. Specifically, he expects developers to make use of the Windows 10 Timeline feature, which has now rolled out with the latest Windows 10 release. He also expects that the deep integration into the Office 365 apps will provide a bit of inspiration to third-party developers.

As for Microsoft 365, the company is emphasizing the developer opportunity here. Besides more consumer-facing features, like ‘Your Phone’ for sending text messages and reacting to notifications from your phone, and Timeline integration in the company’s Android Launcher, Microsoft is also announcing .NET Core 3.0 today, along with an update to the MSIX packaging format for shipping large applications, Sets in Windows 10 and support for Adaptive Cards within Microsoft 365.

This last one may just be the most interesting of these (except for maybe Sets) because users will soon see these cards pop up across Microsoft’s applications. Adaptive Cards is a standard that Microsoft has proposed to let developers describe their content and user interface in a simple JSON file. The general idea is that developers can show these cards in applications like Teams and Outlook so their users can take actions right within those applications. That could mean paying a bill right inside of Outlook, for example, or accepting a pull request from GitHub in Teams.
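For a sense of what such a card looks like, here is a minimal sketch of the bill-paying example as an Adaptive Card, built as a Python dictionary and serialized to JSON. The `AdaptiveCard`, `TextBlock` and `Action.Submit` element types come from the published Adaptive Cards schema; the helper name, the vendor, the amount and the fields inside `data` are invented for illustration, and how a host like Outlook or Teams would route the submitted data back to a service is left out.

```python
import json


def build_bill_card(vendor, amount_due, invoice_id):
    """Return an Adaptive Card (as a dict) asking the user to pay a bill.

    `build_bill_card` and the contents of `data` are hypothetical.
    """
    return {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.0",
        # The body describes what the user sees.
        "body": [
            {
                "type": "TextBlock",
                "text": f"Invoice from {vendor}",
                "weight": "bolder",
                "size": "medium",
            },
            {
                "type": "TextBlock",
                "text": f"Amount due: {amount_due}",
                "wrap": True,
            },
        ],
        # Actions describe what the user can do without leaving the host app.
        "actions": [
            {
                "type": "Action.Submit",
                "title": "Pay now",
                # Free-form payload handed back to the card's sender on submit.
                "data": {"action": "payBill", "invoiceId": invoice_id},
            }
        ],
    }


if __name__ == "__main__":
    card = build_bill_card("Contoso Power", "$42.50", "INV-001")
    print(json.dumps(card, indent=2))
```

The same JSON is meant to be rendered by whichever host shows the card, which is why the description sticks to content and actions rather than pixel-level layout.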