I think most of us have had this experience, especially in a big city: you step off public transit, take a peek at Google Maps to figure out which way you’re supposed to go… and then somehow proceed to walk two blocks in the wrong direction.

Maybe the little blue dot wasn’t actually in the right place yet. Maybe your phone’s compass was bugging out and facing the wrong way because you’re surrounded by 30-story buildings full of metal and other things that compasses hate.

Google Maps’ work-in-progress augmented reality mode wants to end that scenario, drawing arrows and signage onto your camera’s view of the real world to make extra, super sure you’re heading the right way. It compares that camera view with its massive collection of Street View imagery to try to figure out exactly where you’re standing and which way you’re facing, even when your GPS and/or compass might be a little off. It’s currently in alpha testing, and I spent some hands-on time with it this morning.

A little glimpse of what it looks like in action:

Google first announced AR walking directions about nine months ago at its I/O conference, but has been pretty quiet about it since. Much of that time has been spent figuring out the subtleties of the user interface. If they drew a specific route on the ground, early users tried to stand directly on top of the line when walking, even if it wasn’t necessary or safe. When they tried to use particle effects floating in the air to represent paths and curves, a Google UX designer tells us, one user asked why they were ‘following floating trash’.

The Maps team also learned that no one wants to hold their phone up very long. The whole experience has to be pretty quick, and is designed to be used in short bursts — in fact, if you hold up the camera for too long, the app will tell you to stop.

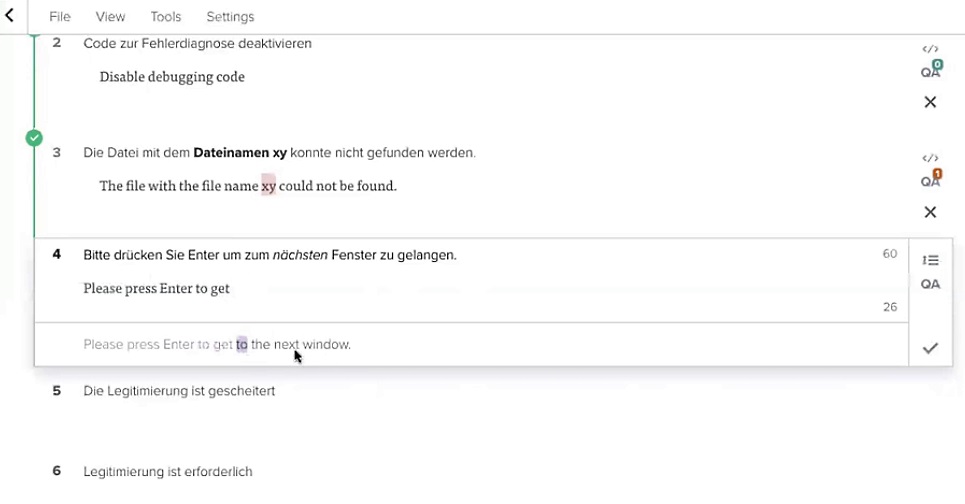

Firing up AR mode feels like starting up any other Google Maps trip. Pop in your destination, hit the walking directions button… but instead of “Start”, you tap the new “Start AR” button.

A view from your camera appears on screen, and the app asks you to point the camera at buildings across the street. As you do so, a bunch of dots will pop up as it recognizes building features and landmarks that might help it pinpoint your location. Pretty quickly (a few seconds, in our handful of tests), the dots fade away, and a set of arrows and markers appears to guide your way. A small cut-out view at the bottom shows your current location on the map, which does a pretty good job of making the transition from camera mode to map mode a bit less jarring.

When you drop the phone to a more natural position (closer to parallel with the ground, like you might hold it if you’re reading texts while you walk), Google Maps will shift back into the standard 2D map view. Hold up the phone like you’re taking a portrait photo of what’s in front of you, and AR mode comes back in.
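To make the tilt behavior described above concrete, here is a minimal Python sketch of a mode switch driven by how far the phone is raised. It is purely illustrative: the `ViewMode` enum, the `next_mode` and `replay` helpers, and both threshold values are assumptions made up for this example, not anything Google has described. The gap between the two thresholds is a guess at how an app might avoid flickering between views when the phone hovers at an in-between angle.

```python
from enum import Enum


class ViewMode(Enum):
    MAP_2D = "map_2d"
    AR = "ar"


# Hypothetical thresholds, in degrees of tilt above horizontal
# (0 = flat, parallel with the ground; 90 = held fully upright).
RAISE_TO_AR_DEG = 60.0
LOWER_TO_MAP_DEG = 30.0


def next_mode(current: ViewMode, pitch_deg: float) -> ViewMode:
    """Return the view mode to show for the latest tilt reading."""
    if pitch_deg >= RAISE_TO_AR_DEG:
        return ViewMode.AR
    if pitch_deg <= LOWER_TO_MAP_DEG:
        return ViewMode.MAP_2D
    # Between the two thresholds, keep whatever mode is already showing.
    return current


def replay(pitches, start=ViewMode.MAP_2D):
    """Feed a sequence of tilt readings through next_mode and collect the modes."""
    mode = start
    modes = []
    for pitch in pitches:
        mode = next_mode(mode, pitch)
        modes.append(mode)
    return modes
```

Calling `replay([10, 45, 70, 45, 20])` walks through a phone being raised and lowered again: the view stays on the map at 45 degrees on the way up, flips to AR at 70, holds AR at 45 on the way down, and returns to the map at 20.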

In our short test (about 45 minutes in all), the feature worked as promised. It definitely works better in some scenarios than others; if you’re closer to the street and thus have a better view of the buildings across the way, it works out its location pretty quickly and with ridiculous accuracy. If you’re in the middle of a plaza, it might take a few seconds longer.

Google’s decision to build this as something that you’re only meant to use for a few seconds is the right one. Between making yourself an easy target for would-be phone thieves and walking into light poles, no one wants to wander a city primarily through the camera lens of their phone. I can see myself using it for the first step or two of a trek to make sure I’m getting off on the right foot, at which point an occasional glance at the standard map will hopefully suffice. It’s about helping you feel more certain, not about holding your hand the entire way.

Google did a deeper dive on how the tech works here, but in short: it’s taking the view from your camera and sending a compressed version up to the cloud, where it’s analyzed for unique visual features. Google has a good idea of where you are from your phone’s GPS signal, so it can compare the Street View data it has for the surrounding area to look for things it thinks should be nearby (certain building features, statues, or permanent structures) and work backwards to your more precise location and direction. There’s also a bunch of machine learning voodoo going on here to ignore things that might be prominent but not necessarily permanent (like trees, large parked vehicles, and construction).
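As a rough illustration of the matching idea described above, the toy Python sketch below picks the most plausible position and heading from a handful of candidates near a GPS fix. Everything in it is an assumption made for the example: the `Feature` and `CandidatePose` types, the `permanent_ids` and `best_pose` helpers, the label names, and the simple overlap score are all hypothetical. Google’s actual system works on image features and machine learning models, not on hand-labeled IDs like these.

```python
from dataclasses import dataclass

# Labels treated as prominent but not permanent (taken from the examples
# in the article; the label strings themselves are hypothetical).
TRANSIENT_LABELS = {"tree", "parked_vehicle", "construction"}


@dataclass(frozen=True)
class Feature:
    feature_id: str  # hypothetical ID for something spotted in the camera view
    label: str       # hypothetical category assigned to it


@dataclass(frozen=True)
class CandidatePose:
    name: str
    heading_deg: float
    expected_ids: frozenset  # features the reference imagery says are visible here


def permanent_ids(observed):
    """Drop transient features and return the IDs of the remaining ones."""
    return {f.feature_id for f in observed if f.label not in TRANSIENT_LABELS}


def best_pose(observed, candidates):
    """Return the candidate whose expected features best overlap what was seen."""
    seen = permanent_ids(observed)

    def score(candidate):
        # Jaccard overlap: shared features divided by all features in either set.
        union = seen | candidate.expected_ids
        return len(seen & candidate.expected_ids) / len(union) if union else 0.0

    return max(candidates, key=score, default=None)


observed = [
    Feature("clock_tower", "building"),
    Feature("statue_a", "statue"),
    Feature("truck_1", "parked_vehicle"),
]
candidates = [
    CandidatePose("corner_facing_north", 0.0, frozenset({"clock_tower", "statue_a"})),
    CandidatePose("corner_facing_south", 180.0, frozenset({"cafe_sign", "bank_door"})),
]
match = best_pose(observed, candidates)
```

Here the parked truck is discarded before scoring, and the north-facing candidate wins because both of the remaining features appear in its expected set. The GPS fix plays the role of narrowing down which candidates are worth scoring in the first place.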

The feature is currently rolling out to “Local Guides” for feedback. Local Guides are an opt-in group of users who contribute reviews, photos, and places while helping Google fact-check location information in exchange for early access to features like this.

Alas, Google told us repeatedly that it has no idea when it’ll roll out beyond that group.

Initial live content that LinkedIn hopes to broadcast lines up with the kind of subject matter you might already see in LinkedIn’s news feed: the plan is to cover conferences, product announcements, Q&As and other events led by influencers and mentors, office hours from a big tech company, earnings calls, graduation and awards ceremonies, and more.

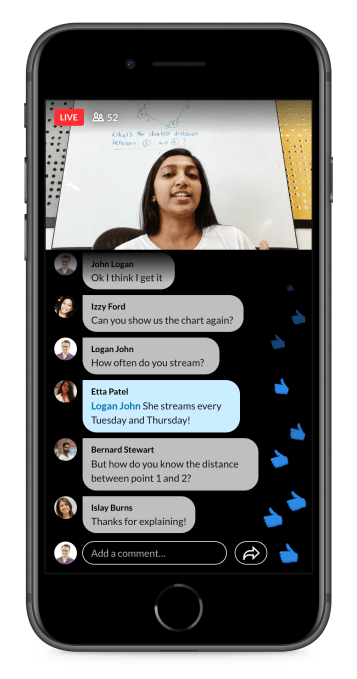


“Live has been the most requested feature,” he said. These other social platforms are serving as a template of sorts: as with these other platforms, users can “like” videos as they are being broadcast, with the likes floating along the screen. Viewers can ask questions or make suggestions in the comments in real-time. Hosts can moderate those comments in real-time, too, to remove harassing or other messages, Davies added.