Sidewalk Infrastructure Partners, the investment firm that spun out of Alphabet’s Sidewalk Labs to fund and develop the next generation of infrastructure, has unveiled its latest project, Resilia, which focuses on upgrading the efficiency and reliability of power grids.

Through a $20 million equity investment in the startup OhmConnect, and an $80 million commitment to develop a demand response program leveraging OhmConnect’s technology and services across the state of California, Sidewalk Infrastructure Partners intends to plant a flag for demand-response technologies as a key pathway to ensuring stable energy grids across the country.

“We’re creating a virtual power plant,” said Sidewalk Infrastructure Partners co-CEO Jonathan Winer. “With a typical power plant … it’s project finance, but for a virtual power plant… We’re basically going to subsidize the rollout of smart devices.”

The idea that people will respond to signals from the grid isn’t a new one, as Winer himself acknowledged in an interview. But the approach that Sidewalk Infrastructure Partners is taking, alongside OhmConnect, to roll out the incentives to residential customers through a combination of push notifications and payouts, is novel. “The first place people focused is on commercial and industrial buildings,” he said.

What drew Sidewalk to the OhmConnect approach was the knowledge of the end consumer that OhmConnect’s management team brought to the table. The company’s chief technology officer previously held the same role at Zynga, Winer noted.

“What’s cool about the OhmConnect platform is that it empowers participation,” Winer said. “Anyone can enroll in these programs. If you’re an OhmConnect user and there’s a blackout coming, we’ll give you five bucks if you turn down your thermostat for the next two hours.”

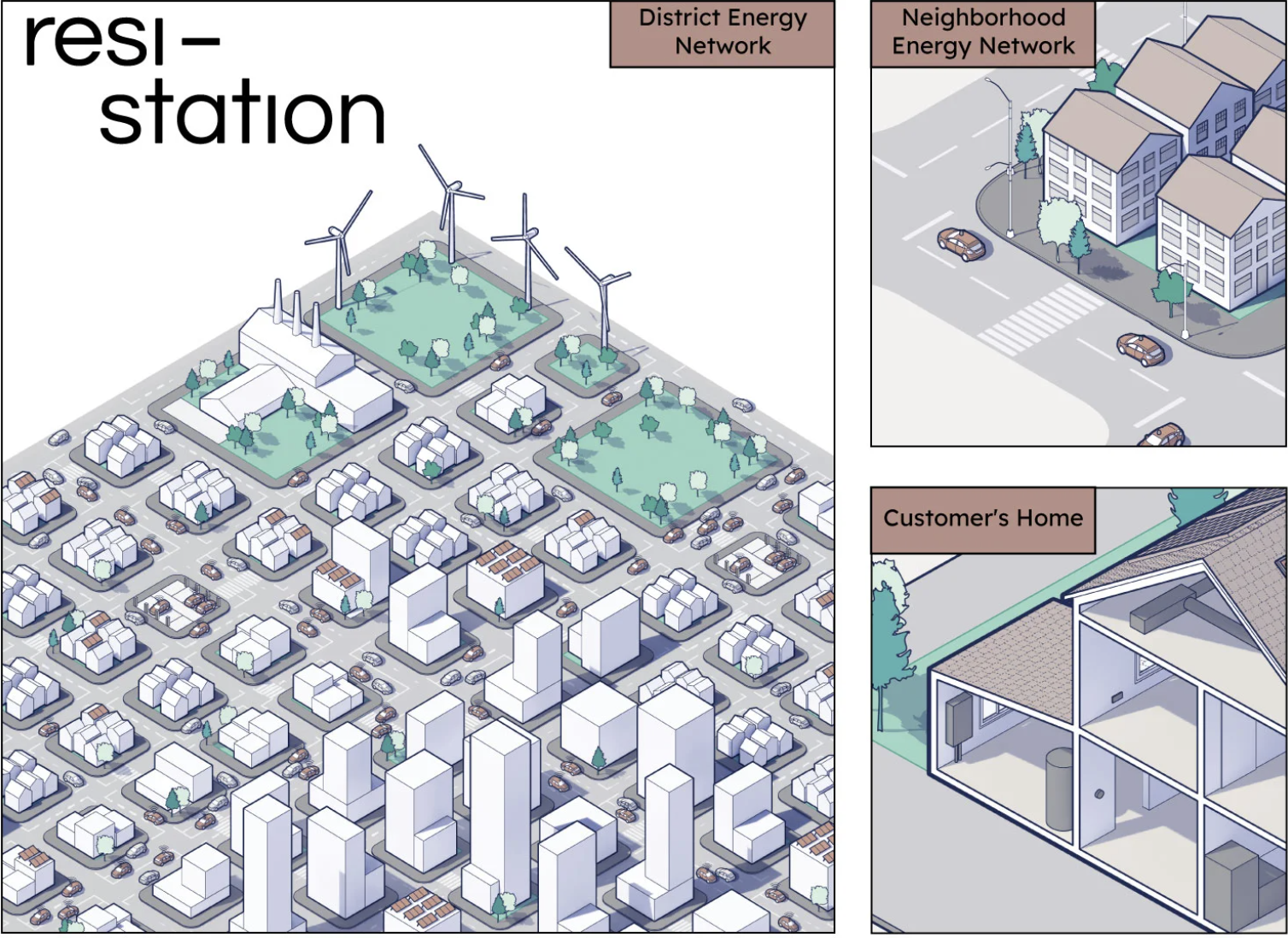

Illustration of Sidewalk Infrastructure Partners’ Resilia Power Plant. Image Credit: Sidewalk Infrastructure Partners

The San Francisco-based demand-response company already has 150,000 users on its platform, and has paid out roughly $1 million to its customers during the brownouts and blackouts that have roiled California’s electricity grid over the past year.

The first collaboration between OhmConnect and Sidewalk Infrastructure Partners under the Resilia banner will be what the companies are calling a “Resi-Station” — a 550 megawatt capacity demand response program that will use smart devices to power targeted energy reductions.

At full scale, the companies said that the project will be the largest residential virtual power plant in the world.

“OhmConnect has shown that by linking together the savings of many individual consumers, we can reduce stress on the grid and help prevent blackouts,” said OhmConnect CEO Cisco DeVries. “This investment by SIP will allow us to bring the rewards of energy savings to hundreds of thousands of additional Californians – and at the same time build the smart energy platform of the future.”

California’s utilities need all the help they can get. Heat waves and rolling blackouts spread across the state as it confronted some of its hottest temperatures over the summer. California residents already pay among the highest residential power prices in the country at 21 cents per kilowatt hour, versus a national average of 13 cents.

During times of peak stress earlier in the year, OhmConnect engaged its customers to reduce almost one gigawatt hour of total energy usage. That’s the equivalent of taking 600,000 homes off the grid for one hour.
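The equivalence above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: it takes the article's rounded figures (one gigawatt hour, 600,000 homes, one hour) at face value and computes the average household load they imply. The function and variable names are assumptions made for this example, not anything published by OhmConnect or Sidewalk Infrastructure Partners.

```python
# Figures quoted in the article (rounded; "almost one gigawatt hour").
ENERGY_REDUCED_GWH = 1.0
HOMES = 600_000
KWH_PER_GWH = 1_000_000  # 1 GWh = 1,000,000 kWh


def implied_kw_per_home(energy_gwh: float, homes: int, hours: float = 1.0) -> float:
    """Average load per home (kW) implied by spreading energy_gwh over homes for hours."""
    return energy_gwh * KWH_PER_GWH / homes / hours


per_home_kw = implied_kw_per_home(ENERGY_REDUCED_GWH, HOMES)
print(f"Implied average load: {per_home_kw:.2f} kW per home")
```

Under these assumptions the comparison implies an average draw of roughly 1.7 kilowatts per home for that hour.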

If the Resilia project were rolled out at scale, the companies estimate they could provide 5 gigawatt hours of energy conservation. That’s the full amount of the energy shortfall from the year’s blackouts and the equivalent of not burning 3.8 million pounds of coal.

Going forward, the Resilia energy efficiency and demand response platform will scale up other infrastructure innovations as energy grids shift from centralized power to distributed, decentralized generation sources, the company said. OhmConnect looks to be an integral part of that platform.

“The energy grid used to be uni-directional … we believe that in the near future the grid is going to become bi-directional and responsive,” said Winer. “With our approach, this won’t be one investment. We’ll likely make multiple investments. [Vehicle-to-grid], micro-grid platforms, and generative design are going to be important.”