Launching things to space doesn’t have to mean firing a large rocket vertically using massive amounts of rocket-fuel powered thrust – startup Aevum breaks the mould in multiple ways, with an innovative launch vehicle design that combines an uncrewed aircraft capable of horizontal take-off and landing with a secondary stage that deploys at high altitude and can take small payloads the rest of the way to space.

Aevum’s model actually isn’t breaking much new ground in terms of its foundational technology, according to founder and CEO Jay Skylus, who I spoke to prior to today’s official unveiling of the startup’s Ravn X launch vehicle. Skylus, who previously worked for a range of space industry household names and startups including NASA, Boeing, Moon Express and Firefly, told me that the startup has focused primarily on making the most of existing available technologies to create a mostly reusable, fully automated small payload orbital delivery system.

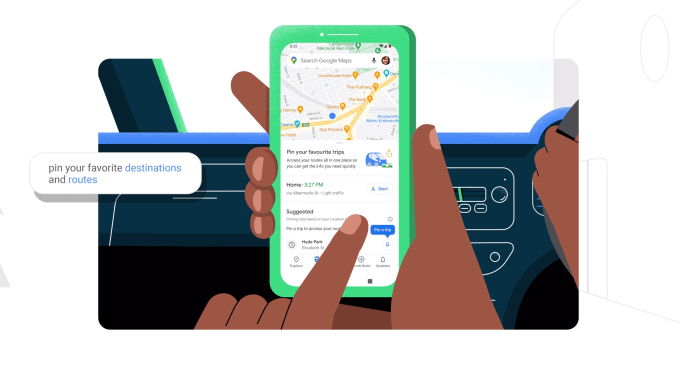

To his point, Ravn X doesn’t look too dissimilar from existing jet aircraft, and bears an obvious resemblance to the Predator line of UAVs already in use for terrestrial uncrewed flight. The vehicle is 80 feet long and has a 60-foot wingspan, with a total max weight of 55,000 lbs including payload. 70% of the system is fully reusable today, and Skylus says that the goal is to iterate on that to the point where 95% of the launch system will be reusable in the relatively near future.

Image Credits: Aevum

Ravn X’s delivery system is designed for rapid response delivery, and is able to get small satellites to orbit in as little as 180 minutes – with the capability of being ready to fly and deliver another payload fairly shortly after that. It uses traditional jet fuel, the same kind used on commercial airliners, and it can take off and land in “virtually any weather,” according to Skylus. It also takes off and lands on any 1-mile stretch of traditional aircraft runway, meaning it can theoretically use just about any active airport in the world as a launch and landing site.

One of the key defining differences of Aevum relative to other space launch startups is that what they’re presenting isn’t theoretical, or in development – the Ravn X already has paying customers, including over $1 billion in U.S. government contracts. Its first mission is with the U.S. Space Force, the ASLON-45 small satellite launch mission, and it also has a contract for 20 missions spanning 9 years with the U.S. Air Force Space and Missile Systems Center. In fact, deliveries of Aevum’s production launch vehicles to its customers have already begun, Skylus says.

The U.S. Department of Defense has been actively pursuing space launch options that provide it with responsive, short turnaround launch capabilities for quite some time now. That’s the same goal pursued by companies like Astra, which was originally looking to win the DARPA challenge for such systems (since expired) with its Rocket small launcher. Aevum’s system has the added advantage of being essentially fully compatible with existing airfield infrastructure – and also of not requiring that human pilots be involved or at risk at all, as they are with the superficially similar launch model espoused by Virgin Orbit.

Aevum isn’t just providing the Ravn X launcher, either; its goal is to handle end-to-end logistics for launch services, including payload transportation and integration. Skylus says these parts of the process are often overlooked or underserved by existing launch providers, and that many companies creating payloads don’t realize how costly, complicated and time-consuming they are when it comes to actually delivering a working small satellite to orbit. The startup also isn’t “re-inventing the wheel” when it comes to its integration services – Skylus says they’re working with a range of existing partners who all already have proven experience doing this work, but who haven’t previously had the motivation or the need to provide these kinds of services to the customers that Skylus sees coming online, both in the public and private sector.

The need isn’t for another SpaceX, Skylus says; rather, thanks to SpaceX, there’s a wealth of aerospace companies who previously worked almost exclusively with large government contracts and the one or two massive legacy rocket companies to put missions together. They’re now open to working with the greatly expanded market for orbital payloads, including small satellites that aim to provide cost-effective solutions in communications, environmental monitoring, shipping and defense.

Aevum’s solution definitely sounds like it addresses a clear and present need, in a way that offers benefits in terms of risk profile, reusability, cost and flexibility. The company’s first active missions will obviously be watched closely, by potential customers and competitors alike.