Salil Deshpande

Contributor

Salil Deshpande serves as the managing director of Bain Capital Ventures. He focuses on infrastructure software and open source.

There’s a dark cloud on the horizon. The behavior of cloud infrastructure providers, such as Amazon, threatens the viability of open source.

During 13 years as a venture investor, I have invested in the companies behind many open-source projects.

Open source has served society, and open-source business models have been successful and lucrative. Life was good.

Amazon’s behavior

I admire Amazon’s execution. In the venture business we are used to the large software incumbents (such as IBM, Oracle, HP, Compuware, CA, EMC, VMware, Citrix and others) being primarily big sales and distribution channels, which need to acquire innovation (i.e. startups) to feed their channel. Not Amazon. In July 2015, The Wall Street Journal quoted me as saying, “Amazon executes too well, almost like a startup. This is scary for everyone in the ecosystem.” That month, I wrote Fear The Amazon Juggernaut on investor site Seeking Alpha. AMZN is up 400 percent since I wrote that article. (I own AMZN indirectly.)

But to anyone other than its customers, Amazon is not a warm and fuzzy company. Numerous articles have detailed its bruising and cutthroat culture. Why would its use of open source be any different?

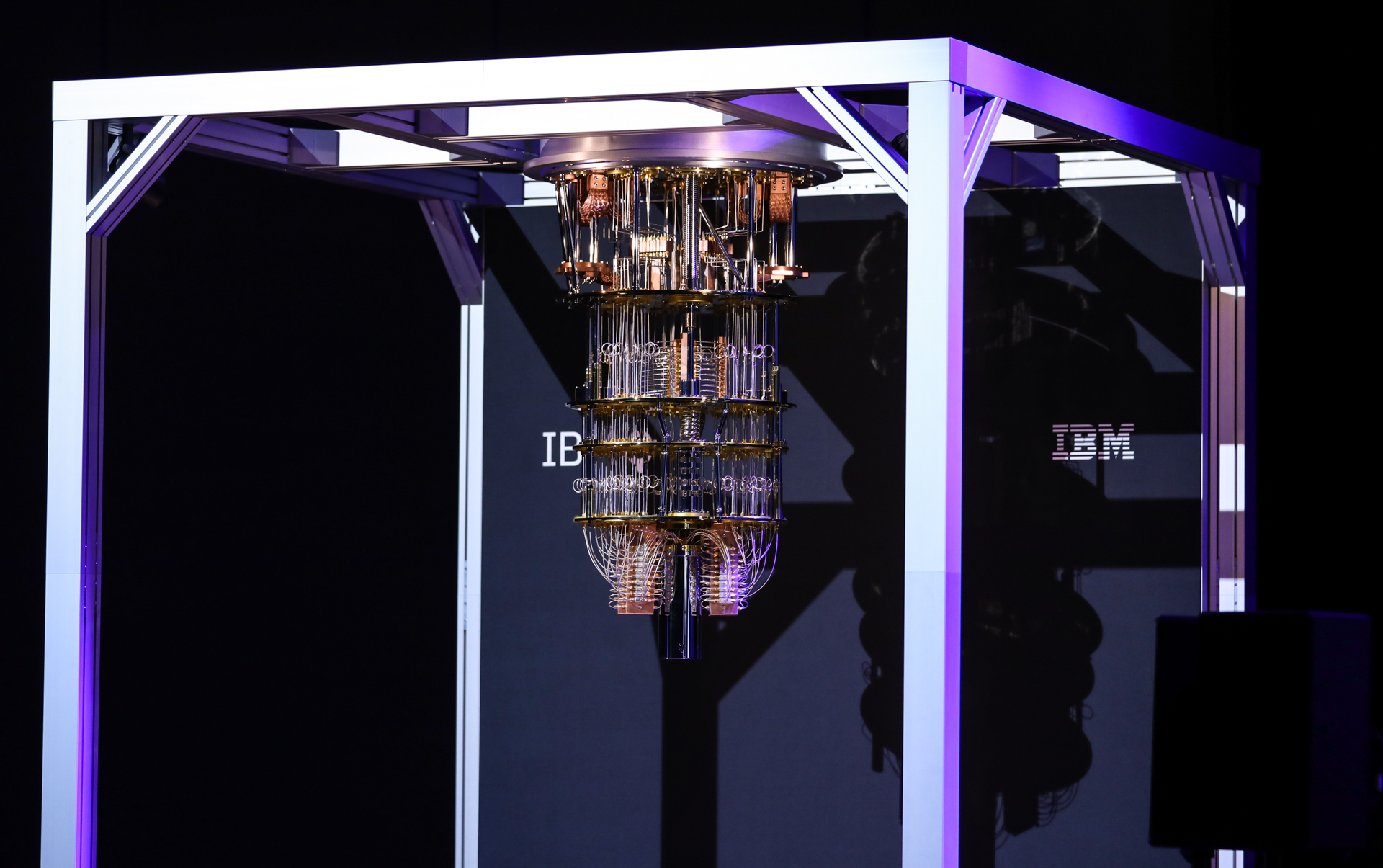

Go to Amazon Web Services (AWS) and hover over the Products menu at the top. You will see numerous open-source projects that Amazon did not create, but runs as-a-service. These provide Amazon with billions of dollars of revenue per year.

For example, Amazon takes Redis (the most loved database in Stack Overflow’s developer survey), gives very little back, and runs it as a service, re-branded as AWS ElastiCache. Many other popular open-source projects, including Elasticsearch, Kafka, Postgres, MySQL, Docker, Hadoop, Spark and more, have similarly been taken and offered as AWS products.

To be clear, this is not illegal. But we think it is wrong, and not conducive to sustainable open-source communities.

Commons Clause

In early 2018, I gathered together creators, CEOs or chief counsels of two dozen at-scale open-source companies, some of them public, to talk about what to do. In March I spoke to GeekWire about this effort. After a lot of constructive discussion, the group decided that rather than beat around the bush by mixing and matching open-source licenses to discourage such behavior, we should create a straightforward clause that prohibits the behavior. We engaged respected open-source lawyer Heather Meeker to draft this clause.

In August 2018 Redis Labs announced their decision to add this rider (i.e. one additional paragraph) known as the Commons Clause to their liberal open-source license for certain add-on modules. Redis itself would remain on the permissive BSD license — nothing had changed with Redis itself! But the Redis Labs add-on modules will include the Commons Clause rider, which makes the source code available, without the ability to “sell” the modules, where “sell” includes offering them as a commercial service. The goal is to explicitly prevent the bad behavior of cloud infrastructure providers.

Anybody else, including enterprises like General Motors or General Electric, can still do all the things they used to be able to do with the software, even with Commons Clause applied to it. They can view and modify the source code and submit pull requests to get their modifications into the product. They can even offer the software as a service internally for employees. What Commons Clause prevents is the running of a commercial service with somebody else’s open-source software in the manner that cloud infrastructure providers do.

This announcement has — unsurprisingly, knowing the open-source community — prompted spirited responses, both favorable and critical. At the risk of oversimplifying: those in favor view this as a logical and positive evolution in open-source licensing that allows open-source companies to run viable businesses while investing in open-source projects. Michael DeHaan, creator of Ansible, in Why Open Source Needs New Licenses, put one part particularly well:

We see people running open source “foundations” and web sites that are essentially talking heads, spewing political arguments about the definition of “open source” as described by something called “The Open Source Initiative”, which contains various names which have attained some level of popularity or following. They attempt to state that such a license where the source code is freely available, but use cases are limited, are “not open source”. Unfortunately, that ship has sailed.

Those neutral or against make three points: that the Commons Clause makes software not open source, which is accurate; that making parts of the code base proprietary is against the ethos of open source; and that Redis Labs must be desperate and having trouble making money.

First, do not worry about Redis Labs. The company is doing very, very well. And Redis is stronger, more loved and more BSD than ever before.

More importantly, we think it is time to reexamine the ethos of open source in today’s environment. When open source became popular, it was designed for practitioners to experiment with and build on, while contributing back to the community. No company was providing infrastructure as a service. No company was taking an open-source project, re-branding it, running it as a service, keeping the profits and giving very little back.

Our view is that open-source software was never intended for cloud infrastructure companies to take and sell. That is not the original ethos of open source. Commons Clause is reviving the original ethos of open source. Academics, hobbyists or developers wishing to use a popular open-source project to power a component of their application can still do so. But if you want to take substantially the same software that someone else has built, and offer it as a service, for your own profit, that’s not in the spirit of the open-source community.

As it turns out in the case of the Commons Clause, that can make the source code not technically open source. But that is something we must live with, to preserve the original ethos.

Apache + Commons Clause

Redis Labs released certain add-on modules as Apache + Commons Clause. Redis Labs made amply clear that the application of Commons Clause made them not open source, and that Redis itself remains open source and BSD-licensed.

Some rabid open-source wonks accused Redis Labs of trying to trick the community into thinking that modules were open source, because they used the word “Apache.” (They were reported to be foaming at the mouth while making these accusations, but in fairness it could have been just drool.)

There’s no trick. The Commons Clause is a rider that is to be attached to any permissive open-source license. Because various open-source projects use various open-source licenses, when releasing software using Commons Clause, one must specify to which underlying permissive open-source license one is attaching Commons Clause.

Why not AGPL?

There are two key reasons not to use AGPL in this scenario. AGPL is an open-source license that says that you must release to the public any modifications you make when you run AGPL-licensed code as a service.

First, AGPL makes it inconvenient but does not prevent cloud infrastructure providers from engaging in the abusive behavior described above. It simply says that they must release any modifications they make while engaging in such behavior. Second, AGPL contains language about software patents that is unnecessary and disliked by a number of enterprises.

Many of our portfolio companies with AGPL projects have received requests from large enterprises to move to a more permissive license, since the use of AGPL is against their company’s policy.

Balance

Cloud infrastructure providers are not bad guys or acting with bad intentions. Open source has always been a balancing act. Many of us believe in our customers and peers seeing our source code, making improvements and sharing back. It’s always a leap of faith to distribute one’s work product for free and to trust that you’ll be able to put food on the table. Sometimes, with some projects, a natural balance occurs without much deliberate effort. But at other times, the natural balance does not occur: We are seeing this more and more with infrastructure open source, especially as cloud infrastructure providers seek to differentiate by moving up the stack from commodity compute and storage to higher level infrastructure services.

Revisions

The Commons Clause as of this writing is at version 1.0. There will be revisions and tweaks in the future to ensure that Commons Clause implements its goals. We’d love your input.

Differences of opinion on Commons Clause that we have seen expressed so far are essentially differences of philosophy. Much criticism has come from open-source wonks who are not in the business of making money with software. They have a different philosophy, but that is not surprising, because their job is to be political activists, not build value in companies.

Some have misconstrued Commons Clause as preventing people from offering maintenance, support or professional services. This is a misreading of the language. Some have claimed that it conflicts with AGPL. Commons Clause is intended to be used with open-source licenses that are more permissive than AGPL, so that AGPL does not have to be used! Still, even with AGPL, few users of an author’s work would deem it prudent to simply disregard an author’s statement of intent to apply Commons Clause.

Protecting open source

Some open-source stakeholders are confused. Whose side should they be on? Commons Clause is new, and we expected debate. The people behind this initiative are committed open-source advocates, and our intent is to protect open source from an existential threat. We hope others will rally to the cause, so that open-source companies can make money, open source can be viable and open-source developers can get paid for their contributions.