At Google I/O today Google Cloud announced Vertex AI, a new managed machine learning platform that is meant to make it easier for developers to deploy and maintain their AI models. It’s a bit of an odd announcement at I/O, which tends to focus on mobile and web developers and doesn’t traditionally feature a lot of Google Cloud news, but the fact that Google decided to announce Vertex today goes to show how important it thinks this new service is for a wide range of developers.

The launch of Vertex is the result of quite a bit of introspection by the Google Cloud team. “Machine learning in the enterprise is in crisis, in my view,” Craig Wiley, the director of product management for Google Cloud’s AI Platform, told me. “As someone who has worked in that space for a number of years, if you look at the Harvard Business Review or analyst reviews, or what have you — every single one of them comes out saying that the vast majority of companies are either investing or are interested in investing in machine learning and are not getting value from it. That has to change. It has to change.”

Wiley, who was also the general manager of AWS’s SageMaker AI service from 2016 to 2018 before coming to Google in 2019, noted that Google and others who were able to make machine learning work for themselves saw how it can have a transformational impact, but he also noted that the way the big clouds started offering these services was by launching dozens of services, “many of which were dead ends,” according to him (including some of Google’s own). “Ultimately, our goal with Vertex is to reduce the time to ROI for these enterprises, to make sure that they can not just build a model but get real value from the models they’re building.”

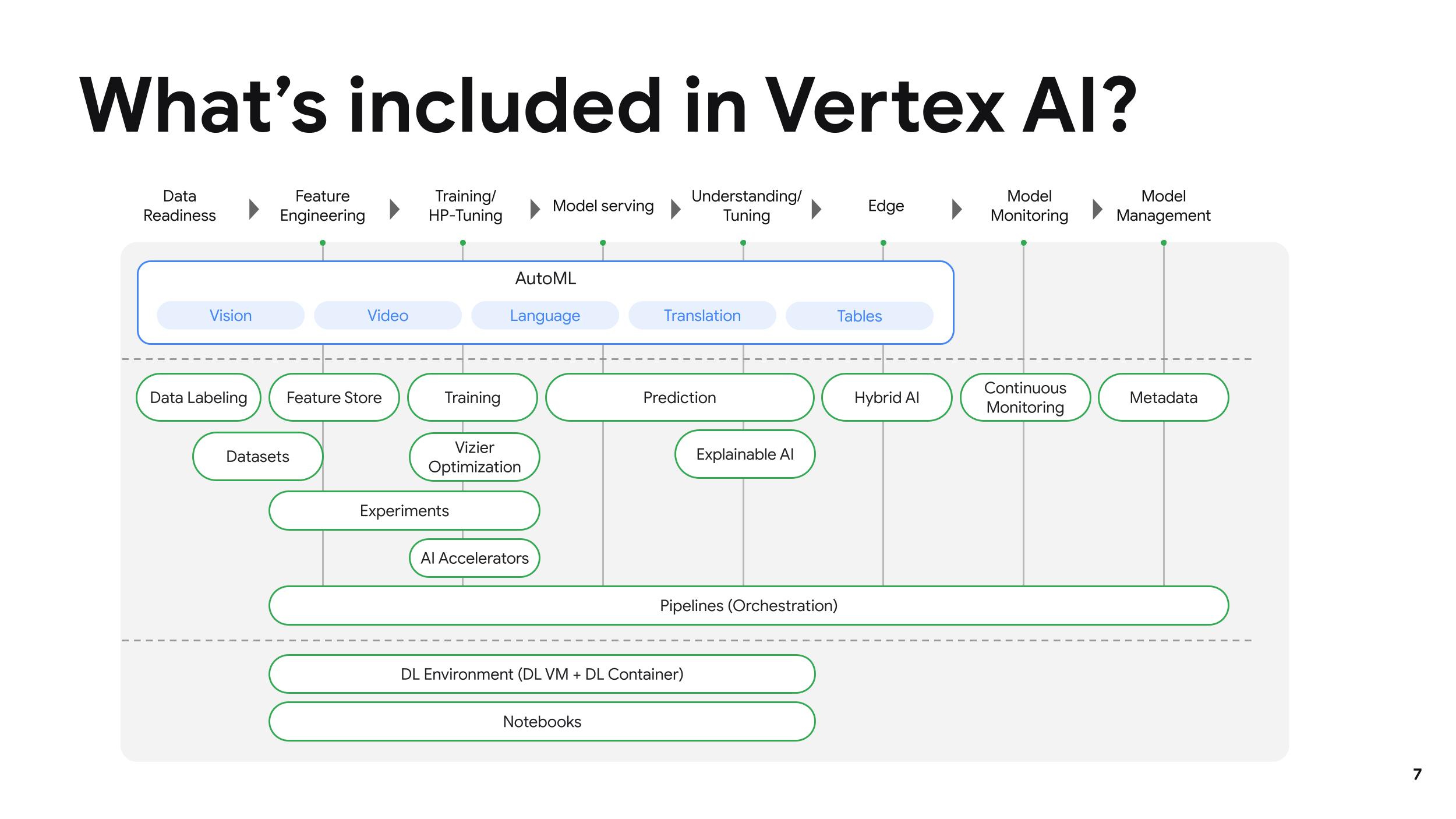

Vertex, then, is meant to be a very flexible platform that allows developers and data scientists across skill levels to quickly train models (Google says it takes about 80% fewer lines of code to train a model than on some competing platforms) and then helps them manage the entire lifecycle of those models.

The service is also integrated with Vizier, Google’s AI optimizer that can automatically tune hyperparameters in machine learning models. This greatly reduces the time it takes to tune a model and allows engineers to run more experiments and do so faster.
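For readers unfamiliar with hyperparameter tuning, the idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses plain random search, a deliberately simple technique that is not Vizier's algorithm, and `validation_loss` is a hypothetical stand-in for actually training and evaluating a model. None of the names below come from the Vertex AI or Vizier APIs.

```python
import random


def validation_loss(learning_rate, batch_size):
    # Hypothetical stand-in for "train a model, then measure validation loss".
    # By construction, the best settings are learning_rate=0.01, batch_size=64.
    return (learning_rate - 0.01) ** 2 * 1e4 + ((batch_size - 64) / 64) ** 2


def random_search(num_trials, seed=0):
    # Try random hyperparameter combinations and keep the best one seen.
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(num_trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(num_trials=50)
print(best_params, best_loss)
```

Each trial here is cheap, but in practice every trial is a full training run, which is why a managed tuning service that chooses trials well and runs them automatically can save engineers a good deal of time.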

Vertex also offers a “Feature Store” that helps its users serve, share and reuse machine learning features, and Vertex Experiments, which helps them accelerate the deployment of their models into production with faster model selection.

Deployment is backed by a continuous monitoring service and Vertex Pipelines, a rebrand of Google Cloud’s AI Platform Pipelines that helps teams manage the workflows involved in preparing and analyzing data for the models, training them, evaluating them and deploying them to production.

To give a wide variety of developers the right entry points, the service provides three interfaces: a drag-and-drop tool, notebooks for advanced users and — and this may be a bit of a surprise — BigQuery ML, Google’s tool for using standard SQL queries to create and execute machine learning models in its BigQuery data warehouse.

“We had two guiding lights while building Vertex AI: get data scientists and engineers out of the orchestration weeds, and create an industry-wide shift that would make everyone get serious about moving AI out of pilot purgatory and into full-scale production,” said Andrew Moore, vice president and general manager of Cloud AI and Industry Solutions at Google Cloud. “We are very proud of what we came up with in this platform, as it enables serious deployments for a new generation of AI that will empower data scientists and engineers to do fulfilling and creative work.”