Welcome to TechCrunch’s 2019 Holiday Gift Guide! Need help with gift ideas? We’re here to help! We’ll be rolling out gift guides from now through the end of December, so check back regularly.

Once again, TechCrunch has asked me to put together a list of travel-friendly gadgets, and once again, I find myself between back-to-back international flights. If nothing else, all of the travel for this gig has made me much better at figuring out what to pack and what to leave behind.

There’s a science to traveling. It takes a lot of trial and error to maintain your sanity through 12-hour flights, power through jet lag and generally make it through in one piece. Common wisdom posits that it’s the journey, not the destination, that counts, but when it comes to the rigors of being in the air and on the road half your life, the journey is usually the worst part.

I’ve narrowed the list down to ten, but there was plenty of stuff I could have included. A good spare battery pack is always a must. There are a million good, cheap ones online. I’ve been carrying around an OmniCharge, myself. Ditto for a solid wheeled suitcase. You can spend a ton of money on an Away bag, or you can buy three bags at a fraction of the price.

Chromebooks are worth a look, as well, for battery life alone. I took the Pixelbook Go with me to China earlier this month. They’re also great for security reasons. Oh, and I won’t include it because it’s a terrible gift, but far and away my most important travel companion is a pack of Wet Ones hand wipes. Hand sanitizer is fine, but if you’re not wiping down your phone a couple of times a day while traveling, you’re not doing yourself any favors.

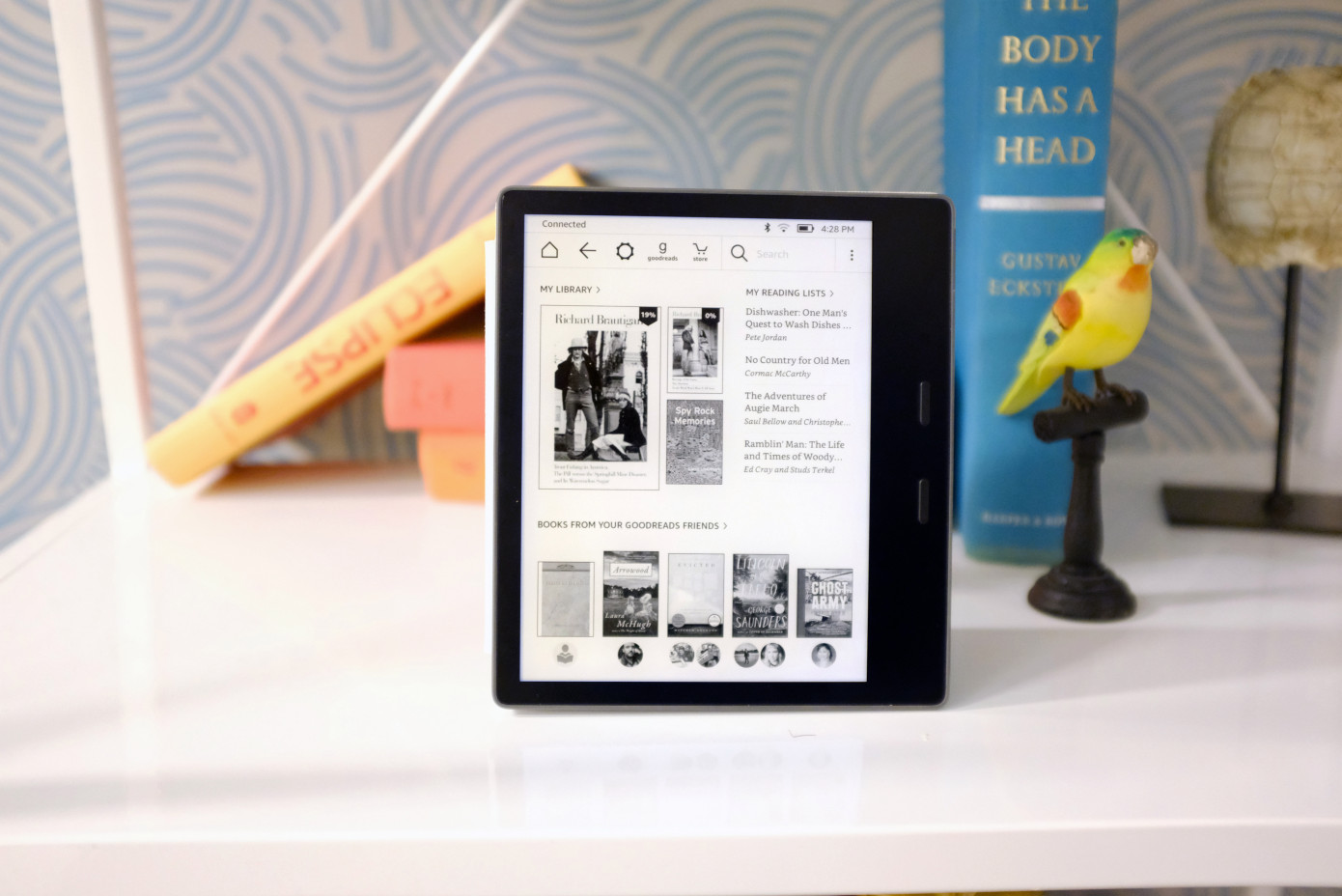

I’ve mostly attempted to avoid repeats from last year, but I couldn’t not include the Oasis. An e-reader is absolutely invaluable for travels. The Oasis is the best on the market and the new version (while largely the same) gets a few tweaks, including an E Ink display with a faster refresh rate and adjustable colors on the front light to reduce blue light. That’s key when attempting to adjust to a new time zone. Also, shoutout to Calibre, a fantastic piece of open-source e-book software I use to convert EPUB files to read on my Kindle.

Everyone knows the importance of traveling with a good battery pack. But what of a plug? Anker’s PowerPort Atom is tiny and useful for a 13-inch MacBook Pro or smaller. At 30W, it packs a punch for its tiny size. That’s key for navigating around crowded outlets, not to mention the plugs under your seat on the plane. I’ve been using a Pixelbook charger as a go-to plug, but the weight of the thing means it’s constantly falling out.

Obvious choice, I realize, but I’m actually not including the iPad for the obvious reason. The arrival of Sidecar on macOS Catalina has made the iPad Pro a terrific second screen for the Mac, in addition to all of its usual tablet functionality. I use it all the time for working on the road. Carrying an actual second monitor in a suitcase is an obvious non-starter, so the iPad works well in a pinch.

I wasn’t prepared for how much I’d love this little gimbal. It’s terrific when tethered to a phone or on its own. Smartphone cameras are really quite good these days, and I imagine most people leave the standalones at home for a trip. The Osmo’s small size makes it perfect for throwing in a backpack, and the things it can do are really quite stunning.

Full disclosure: I used to be too embarrassed to wear a sleep mask on a plane. Enough international flights, however, and you’ll start to get over it pretty quickly. The Dreamlight Ease is the best and most comfortable I’ve used to date. It’s made of form-fitting, stretchable material, combined with “3D facial mapping” tech that comfortably conforms to the face without letting light in underneath. I actually wear it at home sometimes. Also, the side padding makes it much more comfortable to lean your head against the plane window.

LARQ Self Cleaning Water Bottle

Plastic bottles are bad. This much we know. And SFO recently banned their sale. Bring a water bottle to stay hydrated on the road and maybe cut down on some waste in the process. The $100 price tag is pretty lofty as far as these things go, but as someone who recently found a small forest growing in my metal work bottle, the addition of a self-cleaning element seems worth the cost.

Nintendo Switch Lite/Switch Online

I’ve been waiting for a Switch Lite ever since the original Switch was unveiled three years back. It’s the perfect size for travel, and the attached Joy-Cons mean you don’t have to worry about them coming loose in your bag. It’s great for flights and lonely hotel stays. The battery life leaves a bit to be desired, but it’s otherwise a fantastic handheld. Pair it with the ridiculously cheap Switch Online, and you’ve got access to a ton of original NES and SNES titles. A Link to the Past, anyone?

I admit I chose these before having a chance to properly test out the similarly priced AirPods Pro. That said, I can still wholeheartedly recommend Sony’s based on terrific sound quality and noise cancelation that’s perfect for the plane. Powerbeats Pro get a hearty recommendation as well, for battery life and comfort. Just don’t forget to bring a wired set for the plane entertainment system.

I tested this backpack on a trip to Tokyo around this time last year, and I’m still smitten. It’s easily the best travel backpack I’ve ever owned, courtesy of plenty of pockets and an expandable body. It’s well made, rugged and nice to look at, making for a perfect carry-on companion.

Tripley Compression Packing Cubes

Okay, okay, not a gadget, I realize, but invaluable nonetheless. When I first started traveling a lot for work, I was wavering between packing cubes and compression bags. One is great for organization and keeping clothes (relatively) unwrinkled. The other is terrific for space. Tripley’s product is a nice compromise between the two. Just make sure not to get that zipper jammed.

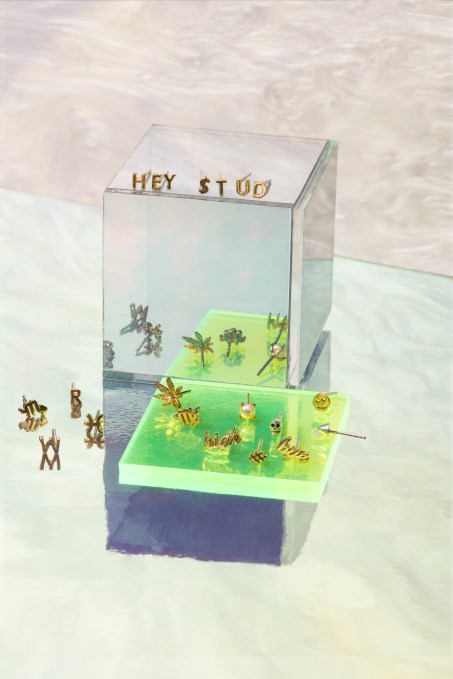
