At the AWS Summit in San Francisco today, Amazon announced a new cloud service that lets organizations create and manage private certificates in the cloud.

While the Summit wasn’t chock full of announcements like the annual re:Invent conference, it did bring some new services, including an all-new Private Certificate Authority (PCA) that beefs up AWS Certificate Manager (ACM). (Amazon does love its acronyms.)

Private certificates let you limit exactly who has access to them, which gives you more control and, in turn, greater security. They are usually confined to a defined group, such as a company or organization, but until now they have been fairly complex to create.

As with any good cloud service, the Private Certificate Authority removes a layer of complexity involved in managing these certificates. “ACM Private CA builds on ACM’s existing certificate capabilities to help you easily and securely manage the lifecycle of your private certificates with pay as you go pricing. This enables developers to provision certificates in just a few simple API calls while administrators have a central CA management console and fine grained access control through granular IAM policies,” Amazon’s Randall Hunt wrote in a blog post announcing the new service.

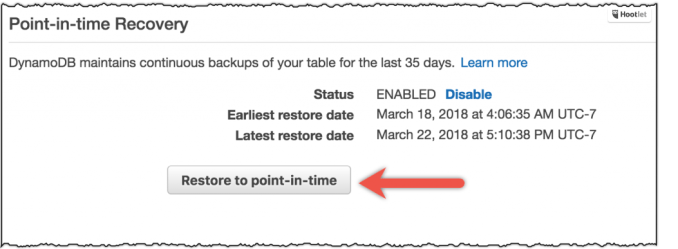

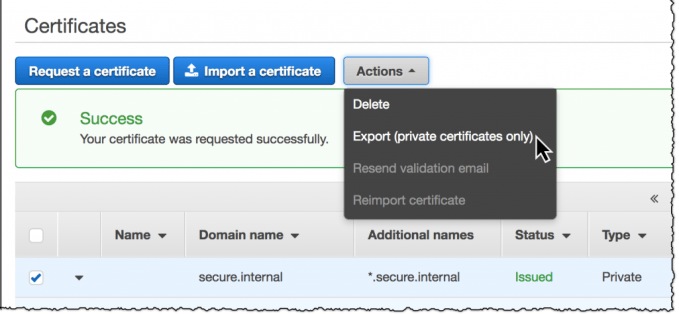

Screenshot: Amazon

The new feature lets you provision and configure certificates, then import or export them after they’ve been created. The CA private keys are stored in “AWS managed hardware security modules (HSMs) that adhere to FIPS 140-2 Level 3 security standards. ACM Private CA automatically maintains certificate revocation lists (CRLs) in Amazon Simple Storage Service (S3),” Hunt wrote. Admins can also pull reports to track which certificates the system has issued.

The new service is available today and costs $400 per month for each private certificate authority you create. For complete pricing details, see the blog post.