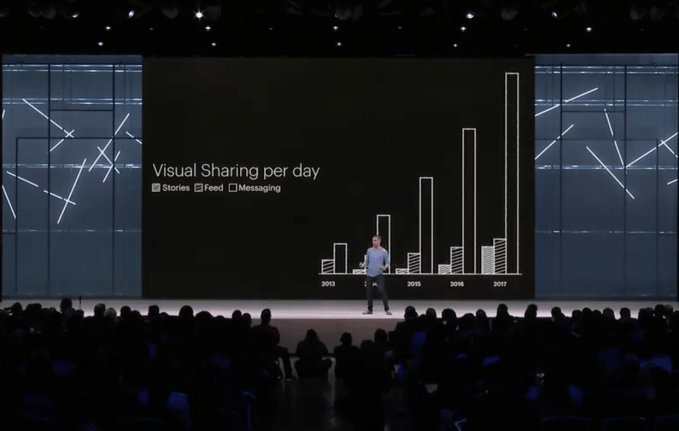

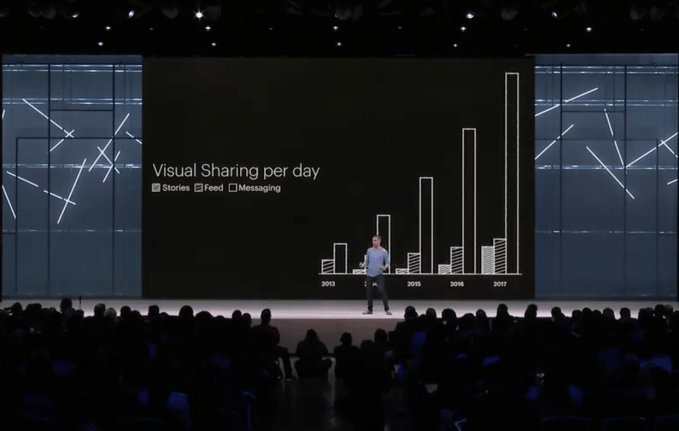

We’re at the cusp of the visual communication era. Stories creation and consumption is up 842 percent since early 2016, according to consulting firm Block Party. Nearly a billion accounts across Snapchat, Instagram, WhatsApp, Facebook, and Messenger now create and watch these vertical, ephemeral slideshows. And yesterday, Facebook chief product officer Chris Cox showed a chart detailing how “the Stories format is on a path to surpass feeds as the primary way people share things with their friends sometime next year.”

The repercussions of this medium shift are vast. Users now consider how every moment could be glorified and added to the narrative of their day. Social media platforms are steamrolling their old designs to highlight the camera and people’s Stories. And advertisers must rethink their message not as a headline, body text, and link, but as a background, overlays, and a feeling that lingers even if viewers don’t click through.

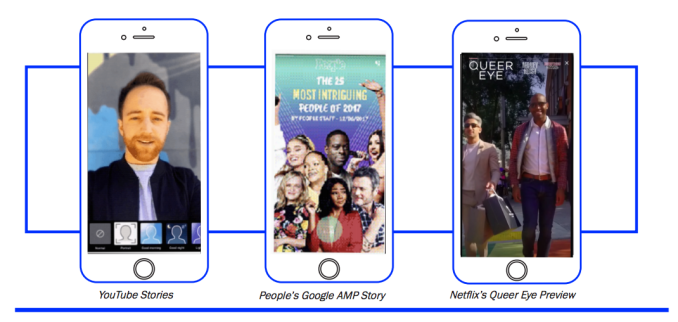

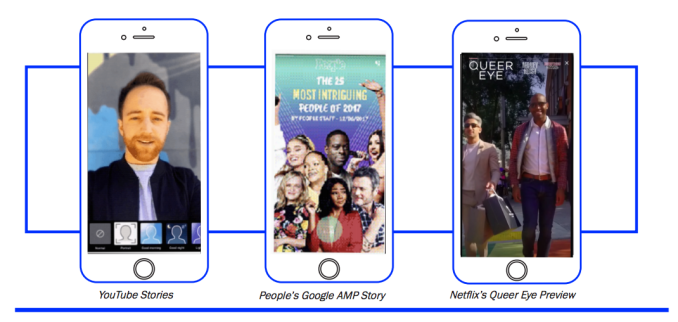

WhatsApp’s Stories now have over 450 million daily users. Instagram’s have over 300 million. Facebook Messenger’s had 70 million in September. And Snapchat as a whole just reached 191 million, about 150 million of whom use Stories according to Block Party. With roughly 970 million accounts combined, it’s the format of the future. Block Party calculates that Stories grew 15X faster than feeds from Q2 2016 to Q3 2017. And that doesn’t even count Google’s new AMP Stories for news, Netflix’s Stories for mobile movie previews, and YouTube’s new Stories feature.

Facebook CEO Mark Zuckerberg even admitted on last week’s earnings call that the company is focused on “making sure that ads are as good in Stories as they are in feeds. If we don’t do this well, then as more sharing shifts to Stories, that could hurt our business.” When asked, Facebook confirmed that it’s now working on monetization for Facebook Stories.

From Invention To Standard

“They deserve all the credit,” Instagram CEO Kevin Systrom told me about Snapchat when his own app launched its clone of Stories. But what sprouted as Snapchat CEO Evan Spiegel and his team reimagining the Facebook News Feed through the lens of its 10-second disappearing messages has blossomed into the dominant way to see life from someone else’s perspective. And just as Facebook and Twitter took FriendFeed and refined it with relevancy sorting, character constraints, and all manner of embedded media, the Stories format is still being perfected. “This is about a format, and how you take it to a network and put your own spin on it,” Systrom followed up.

Snapchat is trying to figure out if Stories from friends and professional creators should be separate, and if they should be sorted by relevancy or reverse chronologically. Instagram and Facebook are opening Stories up to posts from third-party apps like Spotify, which makes them a great way to discover music. WhatsApp is pushing the engineering limits of Stories, figuring out ways to make the high-bandwidth videos play on slow networks in the developing world.

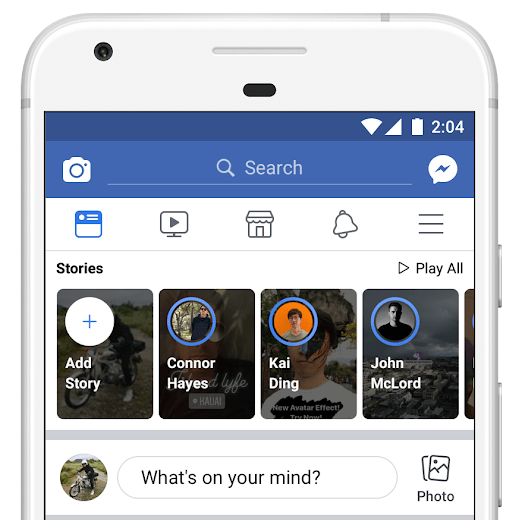

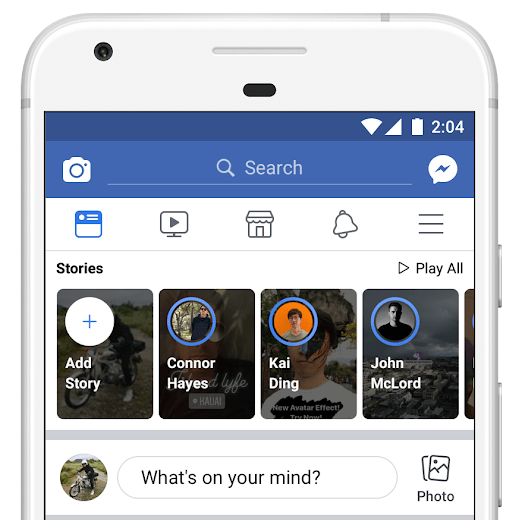

Messenger is removing its camera from the navigation menu and settling in as a place to watch Stories shared from Facebook and Instagram. Meanwhile, Messenger is merging augmented reality, commerce, and Stories so users can preview products in AR and then either share or buy them. Facebook created a Stories carousel ad that lets businesses combine three photos or videos into a slideshow that strings together a narrative. And perhaps most tellingly, Facebook is testing a new post composer for its News Feed that actually shows an active camera and camera roll preview to coerce you into sharing Stories instead of a text status. Companies that resist the trend may be left behind.

Social Media Bedrock

As I wrote two years ago when Snapchat was the only app with Stories:

“Social media creates a window through which your friends can watch your life. Yet most social networks weren’t designed that way, because phones, screen sizes, cameras, and mobile network connections weren’t good enough to build a crystal-clear portal.

With all its text, Twitter is like peering through a crack in a fence. There are lots of cracks next to each other, but none let you see the full story. Facebook is mostly blank space. It’s like a tiny jail-cell window surrounded by concrete. Instagram was the closest thing we had. Like a quaint living room window, you can only see the clean and pretty part they want you to see.

Snapchat is the floor-to-ceiling window observation deck into someone’s life. It sees every type of communication humans have invented: video, audio, text, symbols, and drawings. Beyond virtual reality and 360 video — both tough to capture or watch on the go — it’s difficult to imagine where social media evolves from here.” It turns out that over the next two years, social media would not evolve, but instead converge on Stories.

What comes next is a race for more decorations, more augmented reality, more developers, and more extendability beyond native apps and into the rest of the web. Until we stop using cell phones altogether, we’ll likely see most sharing divided between private messaging and broadcasted Stories.

The medium is a double-edged sword for culture, though. While Stories are a much more vivid way to share and engender empathy, they also threaten to commodify life. When Instagram launched Stories, Systrom said it was because otherwise you “only get to see the highlights.”

But he downplayed how a medium for capturing more than the highlights would pressure people around the world to interrupt any beautiful scene or fit of laughter or quiet pause with their camera phone. We went from people shooting and sharing once or a few times a day to doing so constantly. In fact, people now plan their activities not just around a picture-perfect destination, but around turning their whole journey into success theater.

If Stories are our new favorite tool, we must learn to wield them judiciously. Sometimes a memory is worth more than an audience. When it’s right to record, don’t get in the way of someone else’s experience. And after the Story is shot, return to the moment and save captioning and decoration for down time. Stories are social media bedrock. There’s no richer way to share, so they’re going to be around for a while. We’d better learn to gracefully coexist.

(@find_evil)
